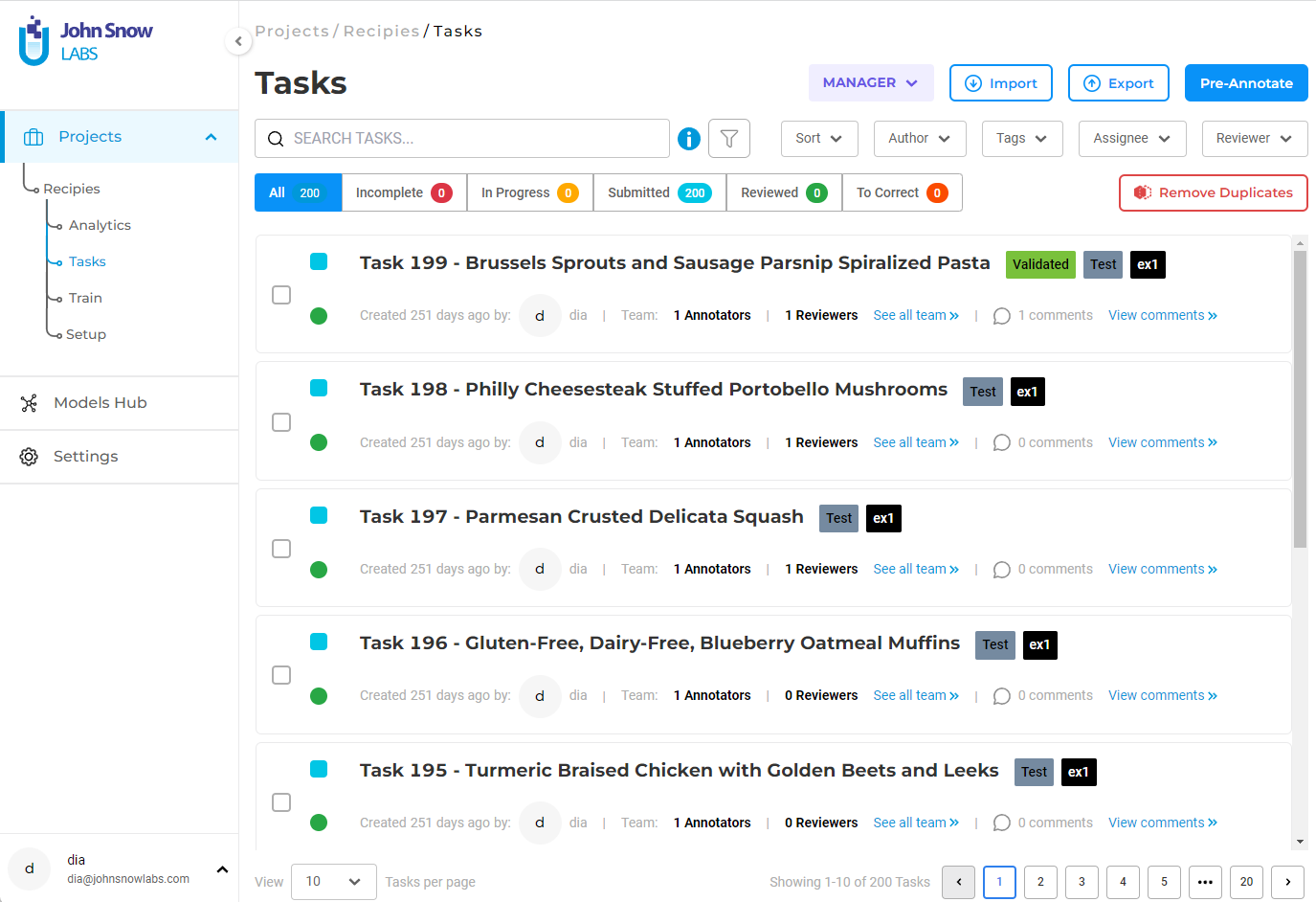

The Tasks screen displays a list of all documents imported into the current project. Each task includes metadata such as import time, importing user, and the annotators and reviewers assigned.

Task Assignment

Project Owners and Managers can assign tasks to annotators and reviewers to manage workloads effectively. Annotators and reviewers only see the tasks assigned to them, preventing accidental overlap.

To assign a task:

- Navigate to the Tasks page.

- Select one or more tasks.

- Use the Assign dropdown to choose the annotator(s).

You can only assign a task to annotators who have already been added to the project team. To add an annotator to the project team, select your project and navigate to the Setup > Team menu item. On the Add Team Member page, search for the user you want to add, select the role you want to assign to them, and click the Add To Team button.

Project Owners can also be explicitly assigned as annotators and/or reviewers for tasks. This is useful in small teams where Project Owners also take part in the annotation process. On the labeling page, an Only Assigned checkbox lets Project Owners restrict the tasks served by the Next button to those explicitly assigned to them.

NOTE: After upgrading from an older version of Generative AI Lab, annotators no longer have access to the tasks they worked on unless the admin user who created the project explicitly assigns those tasks to them. Once assigned, they can resume work and no information is lost.

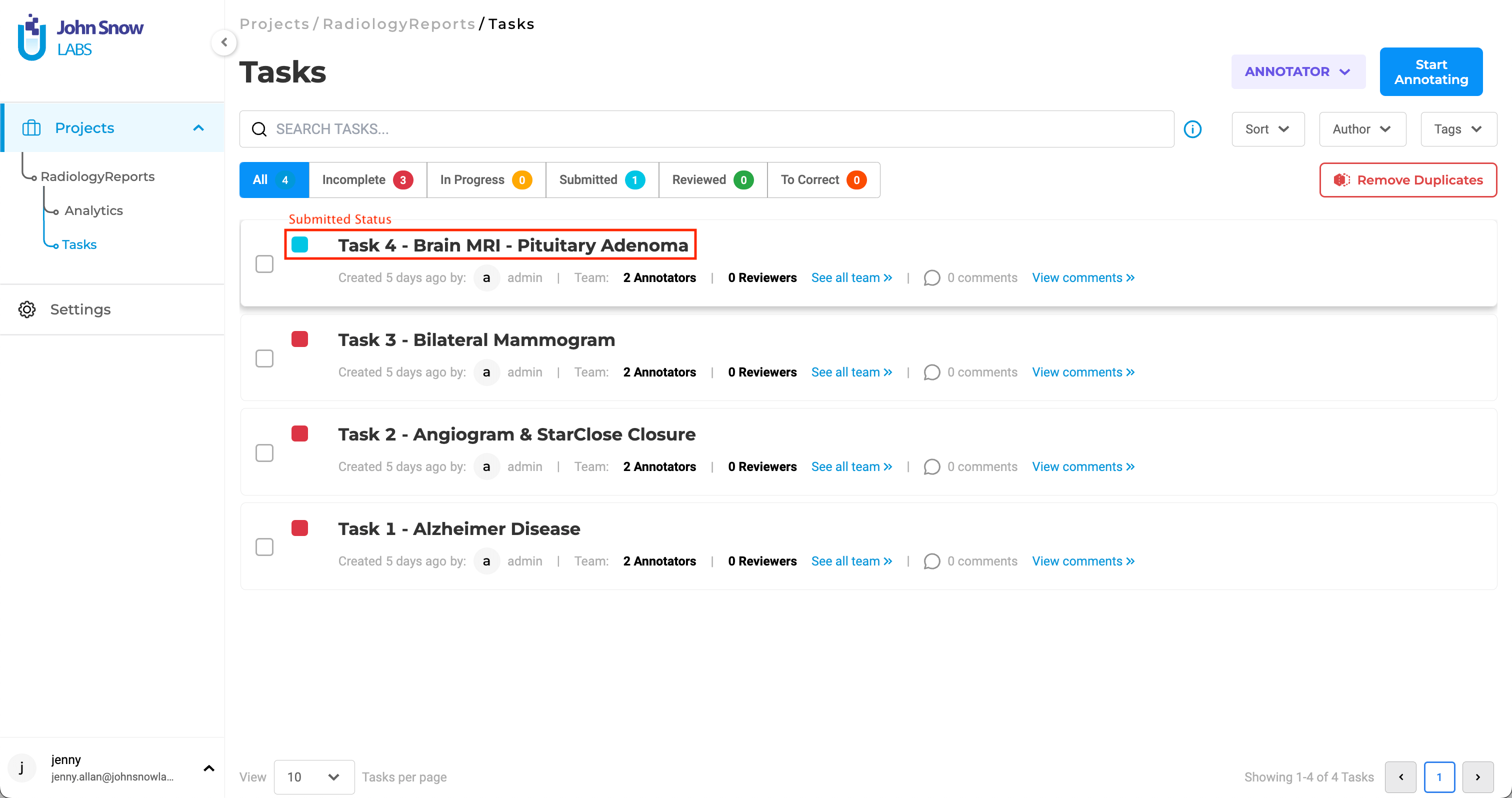

Task Statuses

Each task progresses through several statuses based on the work completed:

- Incomplete, when none of the assigned annotators has started working on the task.

- In Progress, when at least one of the assigned annotators has submitted at least one completion for this task.

- Submitted, when all annotators assigned to the task have submitted a completion that is set as ground truth (starred).

- Reviewed, when a reviewer is assigned to the task and has reviewed and accepted the submitted completion.

- To Correct, when the assigned reviewer has rejected the completion created by the annotator.

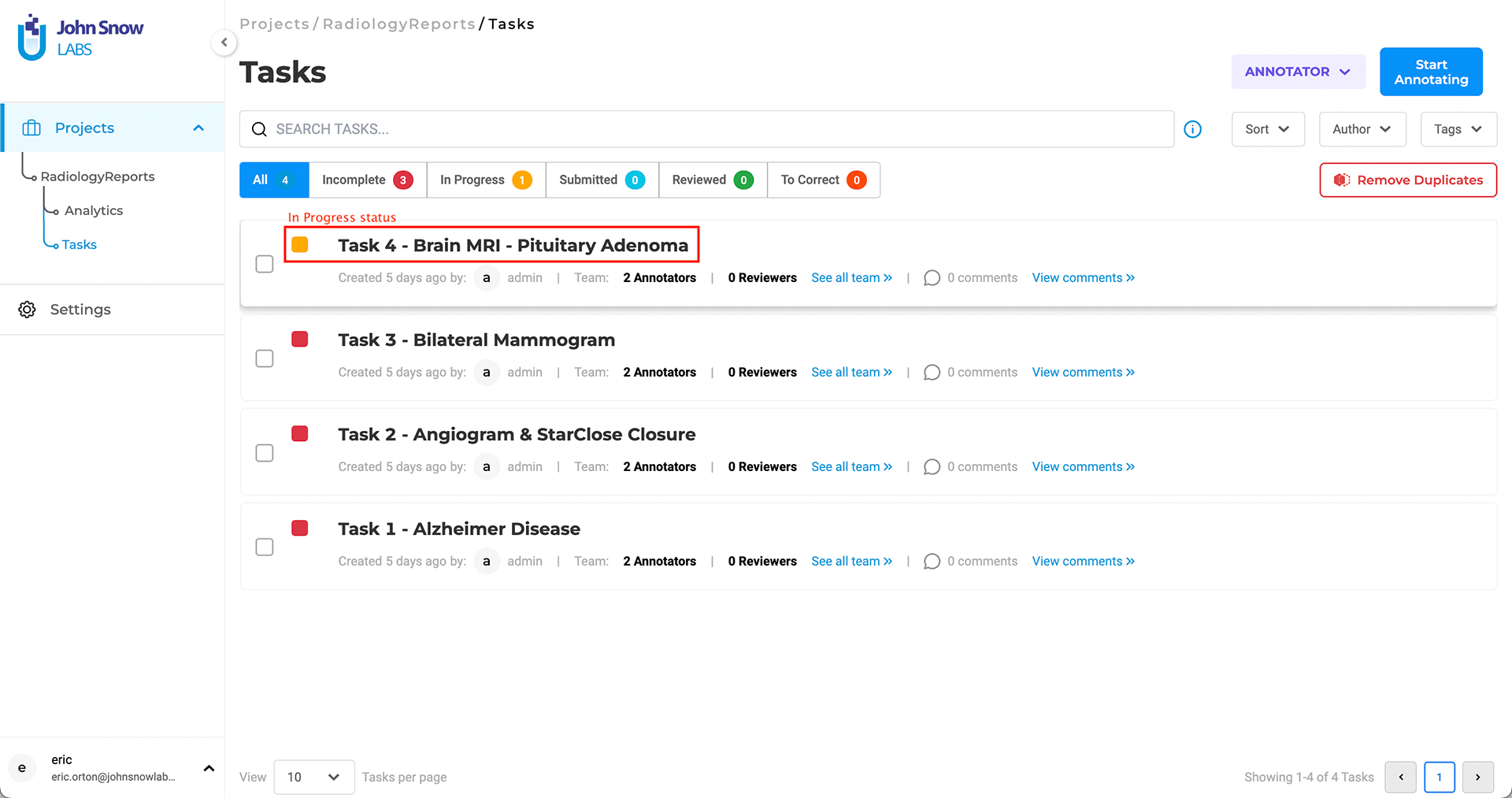

The status shown for a task depends on the role of the logged-in user (their visibility over the project) and on the tasks assigned to them.

For Project Owner, Manager and Reviewer

On the Analytics page and the Tasks page, Project Owners, Managers and Reviewers see a general overview of the project, based on the following task-level statuses:

- Incomplete - Assigned annotators have not started working on this task

- In Progress - At least one annotator still has not starred (marked as ground truth) one submitted completion

- Submitted - All annotators that are assigned to the task have starred (marked as ground truth) one submitted completion

- Reviewed - Reviewer has approved all starred submitted completions for the task
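
The rules above can be read as a small decision procedure. The following is a minimal sketch under stated assumptions, not the product's implementation: the `Completion` class, its field names, and the `overall_status` function are hypothetical and exist only to illustrate how the four statuses relate to each other.

```python
from dataclasses import dataclass


@dataclass
class Completion:
    # Hypothetical model of a completion; field names are illustrative only.
    annotator: str
    submitted: bool = False
    starred: bool = False    # marked as ground truth
    approved: bool = False   # accepted by the reviewer


def overall_status(assigned, completions):
    """Task-level status as seen by a Project Owner, Manager or Reviewer."""
    relevant = [c for c in completions if c.annotator in assigned]
    if not relevant:
        return "Incomplete"  # no assigned annotator has started working

    # Group the starred, submitted completions by annotator.
    starred = {}
    for c in relevant:
        if c.submitted and c.starred:
            starred.setdefault(c.annotator, []).append(c)

    if len(starred) < len(set(assigned)):
        return "In Progress"  # at least one annotator has nothing starred yet
    if all(c.approved for group in starred.values() for c in group):
        return "Reviewed"     # every starred submission was approved
    return "Submitted"


done = [Completion("ann", True, True), Completion("bob", True, True)]
print(overall_status(["ann", "bob"], done))      # Submitted
print(overall_status(["ann", "bob"], done[:1]))  # In Progress
```

The sketch checks the least advanced condition first, so a task only reaches Submitted or Reviewed once every assigned annotator has a starred submission.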

For Annotators

On the Annotator’s Tasks page, the task status reflects the work of the logged-in annotator only. For example, if the same task is assigned to two annotators:

- if annotator1 is still working and has not submitted the task, annotator1 sees the task status as In Progress

- if annotator2 has submitted the task, annotator2 sees the task status as Submitted

The following statuses are available on the Annotator’s view.

- Incomplete - Current logged-in annotator has not started working on this task.

- In Progress - At least one saved or submitted completion exists, but there is no starred submitted completion.

- Submitted - Annotator has at least one starred submitted completion.

- Reviewed - Reviewer has approved the starred submitted completion for the task.

- To Correct - Reviewer has rejected the submitted work. In this case, the star is removed from the reviewed completion. The annotator should start working on the task and resubmit.
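
As an illustration, the annotator-side statuses can be expressed as a function over that annotator's own completions. This is a sketch only: `OwnCompletion`, its `review` field and `annotator_status` are hypothetical names, and it assumes that a rejected task stays in To Correct until the annotator stars a new submitted completion, which the text above does not state explicitly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class OwnCompletion:
    # Hypothetical model of one completion owned by the logged-in annotator.
    submitted: bool = False
    starred: bool = False
    review: Optional[str] = None  # "approved", "rejected", or None


def annotator_status(completions):
    """Status of a task from the point of view of a single annotator."""
    if not completions:
        return "Incomplete"
    starred = [c for c in completions if c.submitted and c.starred]
    if any(c.review == "approved" for c in starred):
        return "Reviewed"
    if starred:
        return "Submitted"
    # A rejection removes the star, so no starred completion remains.
    if any(c.review == "rejected" for c in completions):
        return "To Correct"  # assumption: holds until work is resubmitted
    return "In Progress"


print(annotator_status([OwnCompletion(submitted=True)]))                # In Progress
print(annotator_status([OwnCompletion(submitted=True, starred=True)]))  # Submitted
```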

Note: The status of a task is maintained/available only for the annotators assigned to the task.

When multiple Annotators are assigned to a task, the Reviewer sees the task as Submitted only when all assigned Annotators have submitted and starred a completion. If any assigned Annotator has not submitted or has not starred a completion, the Reviewer sees the task as In Progress.

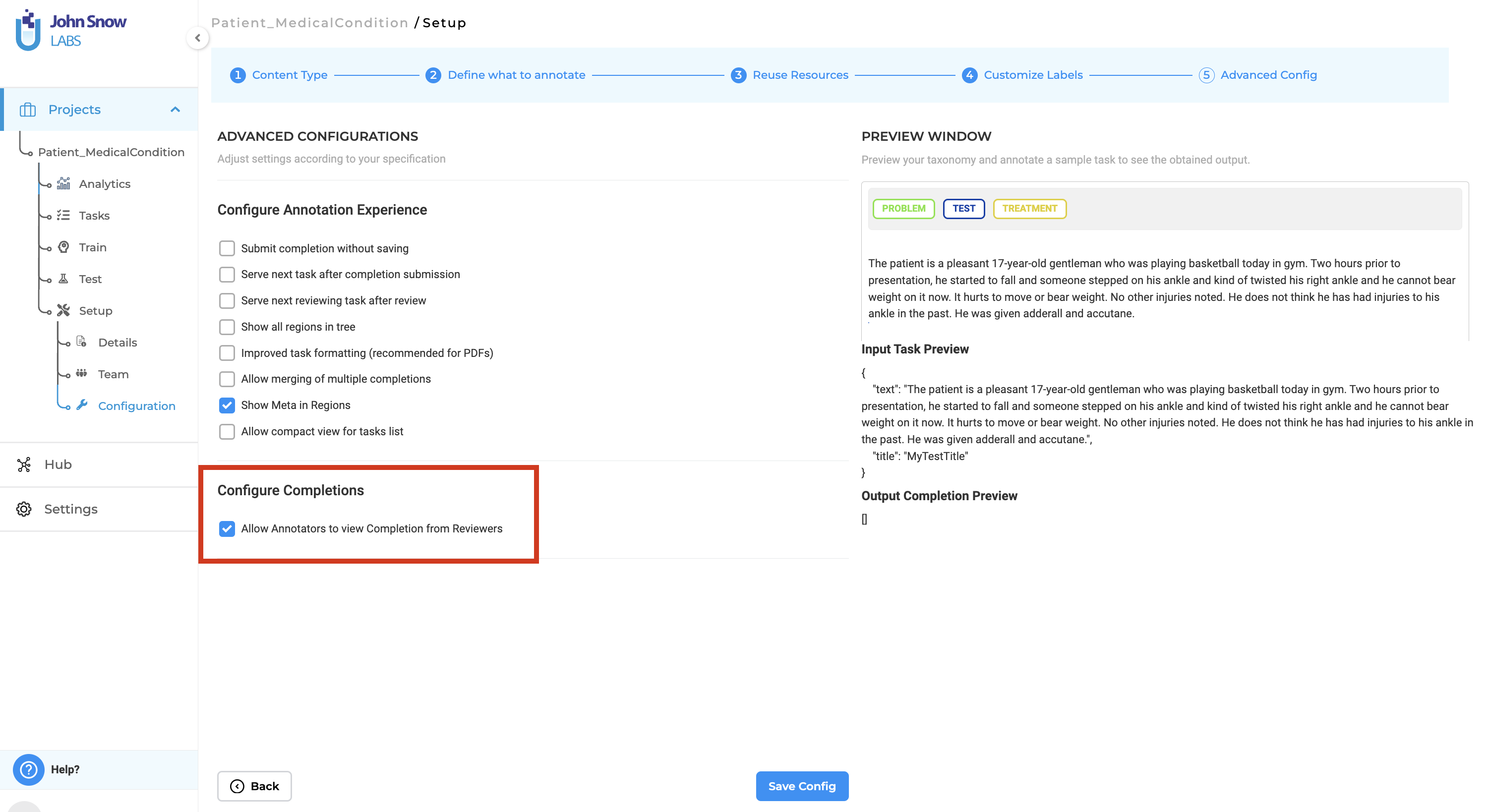

Reviewer Feedback Visibility

Annotators can now view corrections and comments added by reviewers, enhancing transparency and collaboration.

How it works:

- Reviewer Clones Submission: Reviewers can clone the annotator’s submission and make necessary corrections or add comments directly in the text using the meta option for the annotated chunks.

- Submit Reviewed Completion: The reviewer submits the cloned completion with corrections and comments.

- Annotator Reviews Feedback: The annotator whose submission was reviewed can view the reviewer’s cloned completion and see the comments and corrections made.

- Implement Changes: The annotator can then make the required changes based on the detailed feedback provided by the reviewer.

To Enable:

- The project manager must enable the option “Allow annotators to view completions from reviewers” in the project settings.

Benefits:

- Enhanced Feedback: Reviewers can now make precise corrections and add detailed comments on individual labels, helping annotators understand exactly what changes are needed.

- Improved Collaboration: Annotators can better align their work with the reviewer’s expectations by viewing the reviewer’s cloned submission.

- Quality Control: This feature helps in maintaining a higher standard of annotations by ensuring that feedback is clear and actionable.

Task Ranking and Display Preferences

Generative AI Lab supports prioritizing and organizing tasks through ranking scores and persistent display preferences.

Task Ranking (Priority Score)

Each task can include an optional priority or ranking score field, which helps teams focus on the most important or urgent cases first.

If a ranking score is provided during task import (for example, as a field in the import JSON or CSV), it will automatically appear as a Rank column in the task list.

Users can:

- Sort tasks by ranking to bring high-priority documents to the top.

- Filter or visually identify high-value tasks for review or QA.

This feature enables project managers and reviewers to efficiently manage workflows, ensuring that critical items receive attention first.
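
To make this concrete, the sketch below prepares a JSON import payload in which each task carries a ranking score, then orders the tasks the way a sort on the Rank column might. It rests on assumptions: the key names `text` and `rank` are hypothetical (check the import documentation for the actual field names), and the sketch assumes that a higher score means higher priority.

```python
import json

# Hypothetical task records; "text" and "rank" are illustrative key names.
tasks = [
    {"text": "Routine follow-up note.", "rank": 0.2},
    {"text": "Urgent discharge summary.", "rank": 0.9},
    {"text": "Document without a score."},
]


def sort_by_rank(tasks, key="rank"):
    """Ranked tasks first, highest score on top; unranked tasks keep their order."""
    ranked = [t for t in tasks if key in t]
    unranked = [t for t in tasks if key not in t]
    return sorted(ranked, key=lambda t: t[key], reverse=True) + unranked


ordered = sort_by_rank(tasks)
payload = json.dumps(ordered, indent=2)  # text that could be saved as an import file
print(payload)
```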

Persistent “Tasks per Page” Setting

The Tasks per page preference is now remembered across sessions.

When a user selects a preferred number of visible tasks from the dropdown, that setting is saved and automatically applied the next time they open the Tasks page, so there is no need to reselect it in every session.

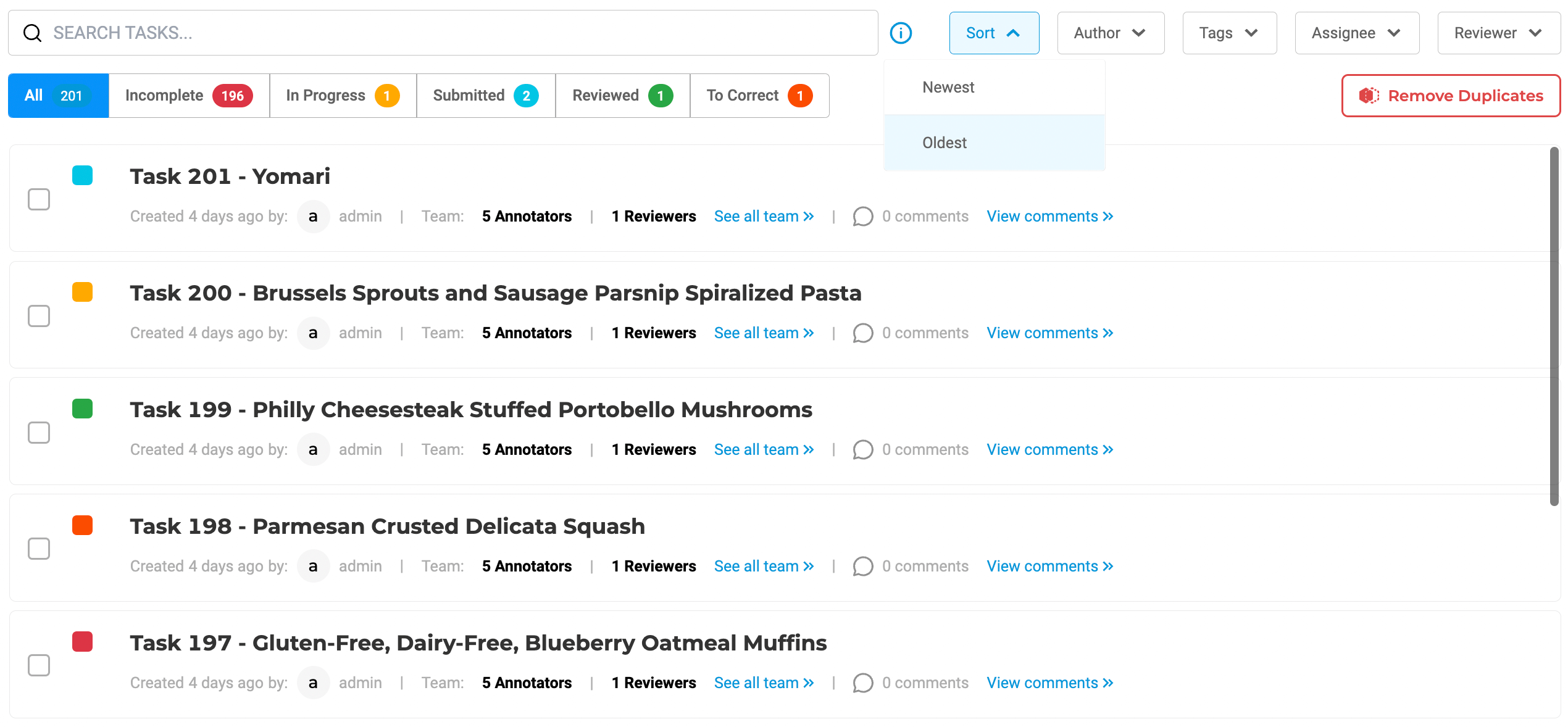

Task Filters

Because annotation projects usually involve a large number of tasks, the Tasks page includes filtering and sorting options that help users find the tasks they need. Tasks can be sorted by import time, in ascending or descending order.

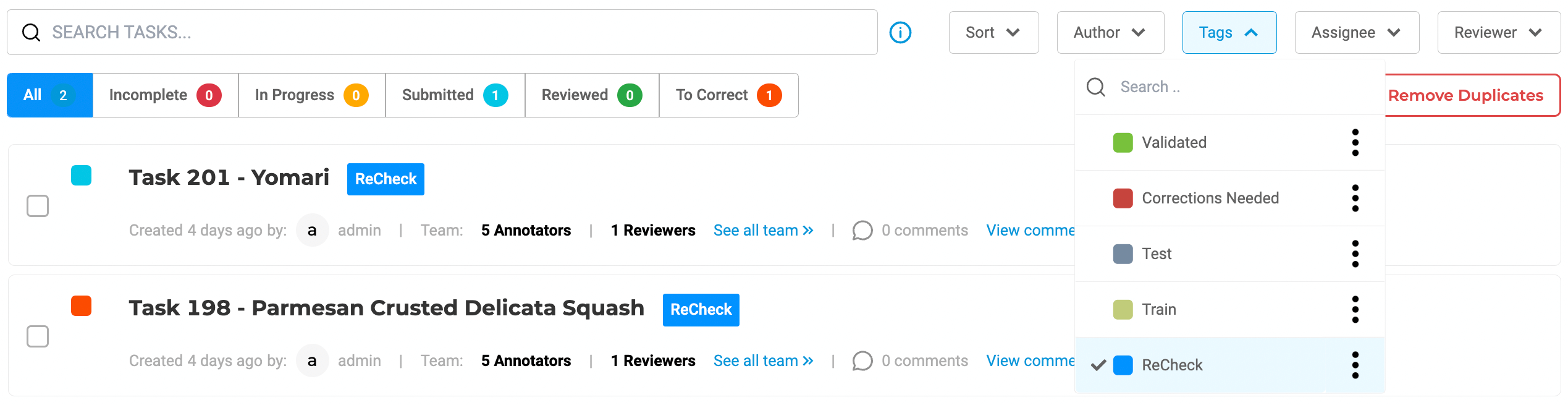

Tasks can be filtered by the assigned tags, by the user who imported the task and by the status.

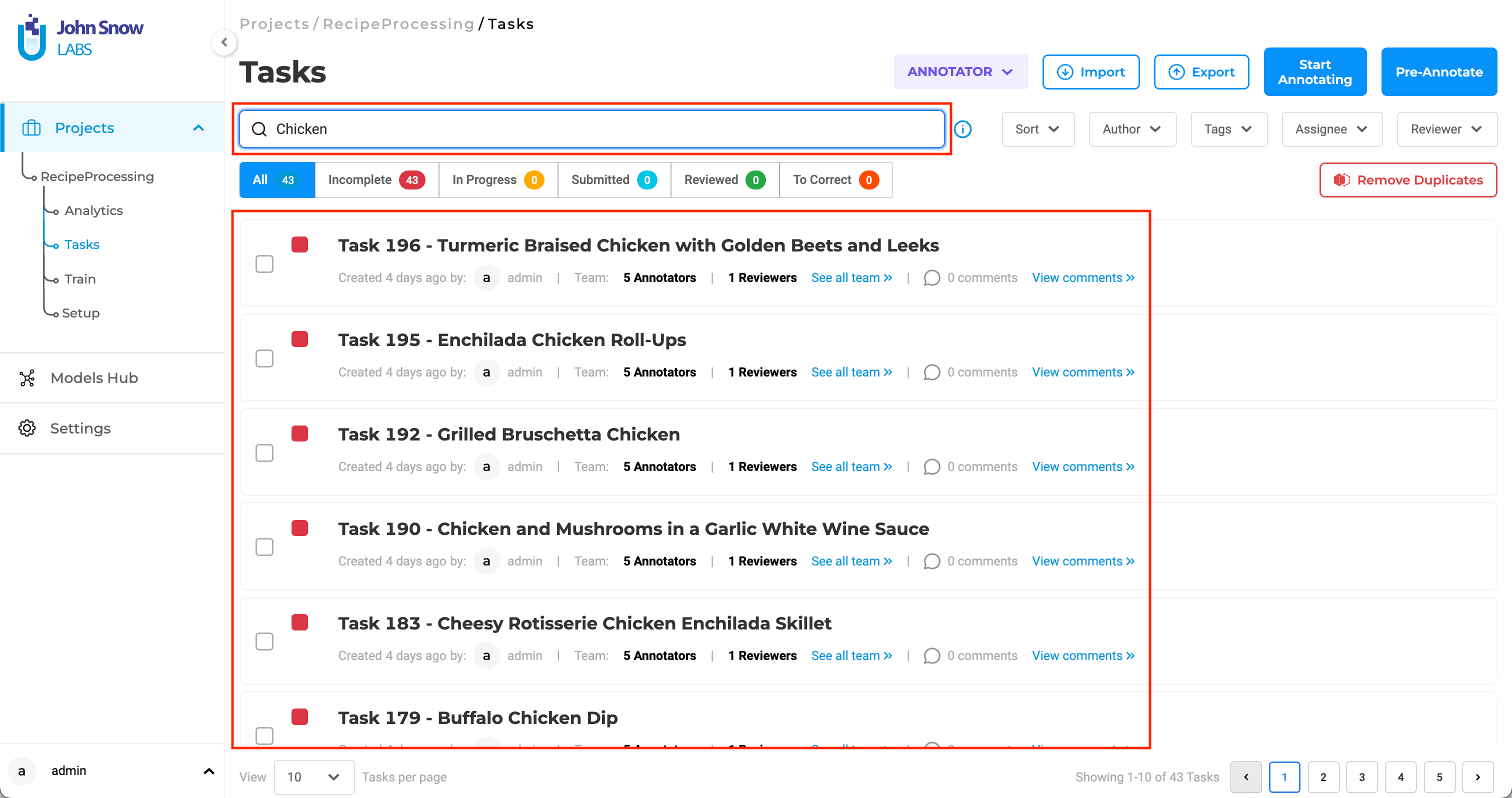

There is also a search functionality that finds tasks whose name contains a given string.

The number of tasks visible on the screen can be changed by selecting one of the predefined values from the Tasks per page drop-down.

Multiple tag filters can be selected to enhance filtering precision.

Task Search by Text, Label and Choice

Generative AI Lab offers advanced search features that help users identify the tasks they need based on the text or based on the annotations defined so far. Currently supported search queries are:

- text: patient -> returns all tasks which contain the string “patient”;

- label: ABC -> returns all tasks that have at least one completion containing a chunk with label ABC;

- label: ABC=DEF -> returns all tasks that have at least one completion containing the text DEF labeled as ABC;

- choice: Sport -> returns all tasks that have at least one completion which classified the task as Sport;

- choice: Sport,Politics -> returns all tasks that have at least one completion containing multiple choices Sport and Politics.

Search is case-insensitive, so the queries label: ABC=DEF, label: Abc=Def and label: abc=def are equivalent.
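
The query forms above can be sketched as a small matcher. This is an illustration of the documented semantics, not the product's search engine: the task dictionary layout (`text`, `completions`, `spans`, `choices`) and the function names are hypothetical, and the sketch assumes that label: ABC=DEF matches when DEF appears anywhere inside the labeled chunk.

```python
def parse_query(query):
    """Split 'kind: value' into a lowercased (kind, value) pair."""
    kind, sep, value = query.partition(":")
    kind, value = kind.strip().lower(), value.strip().lower()
    if not sep or kind not in {"text", "label", "choice"} or not value:
        raise ValueError(f"unsupported search query: {query!r}")
    return kind, value


def matches(task, query):
    """Return True if the (hypothetical) task record satisfies the query."""
    kind, value = parse_query(query)
    completions = task.get("completions", [])
    if kind == "text":
        return value in task.get("text", "").lower()
    if kind == "label":
        # 'abc=def' -> label 'abc', chunk text 'def'; chunk is '' when omitted.
        label, _, chunk = (part.strip() for part in value.partition("="))
        return any(
            span["label"].lower() == label and chunk in span["text"].lower()
            for c in completions
            for span in c.get("spans", [])
        )
    # choice: every requested choice must appear in the same completion.
    wanted = {choice.strip() for choice in value.split(",")}
    return any(
        wanted <= {ch.lower() for ch in c.get("choices", [])}
        for c in completions
    )


task = {
    "text": "The patient visited Paris.",
    "completions": [
        {"spans": [{"label": "LOC", "text": "Paris"}],
         "choices": ["Sport", "Politics"]},
    ],
}
print(matches(task, "label: loc=PARIS"))        # True
print(matches(task, "choice: Sport,Politics"))  # True
```

Lowercasing both the query and the task content before comparing is what makes the three spellings of label: ABC=DEF behave identically.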

Example:

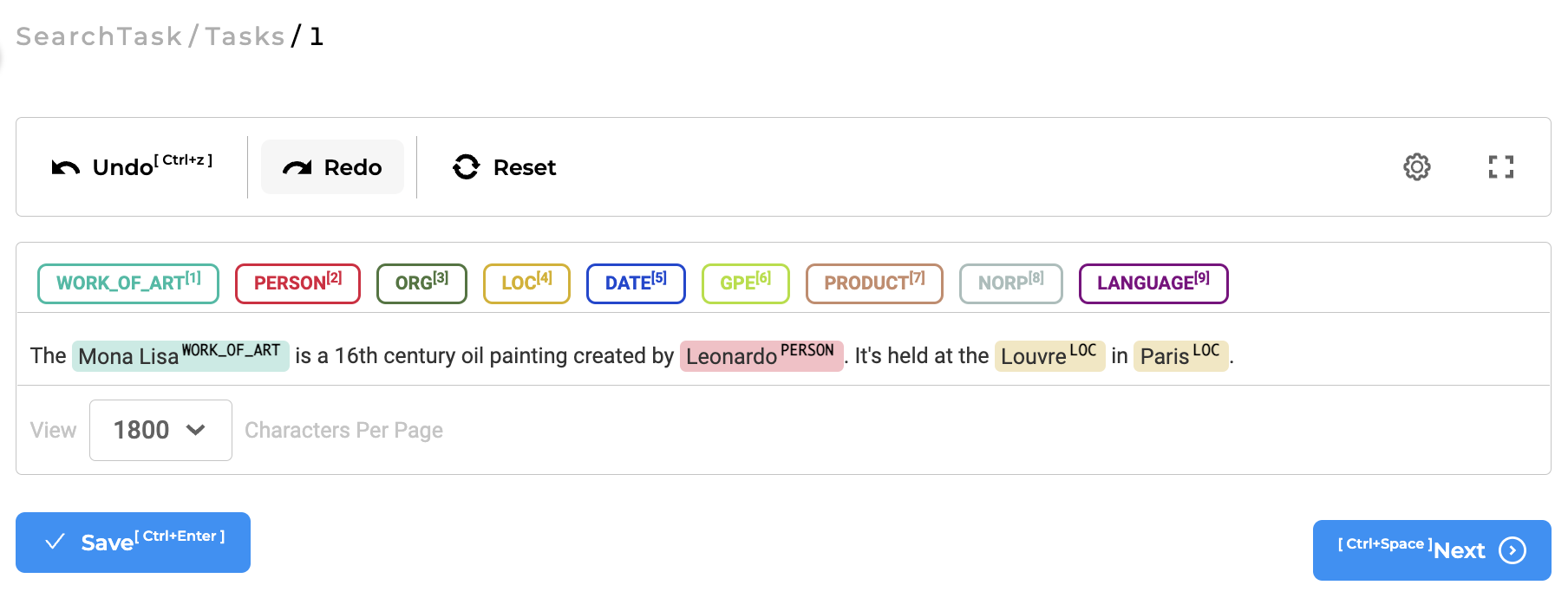

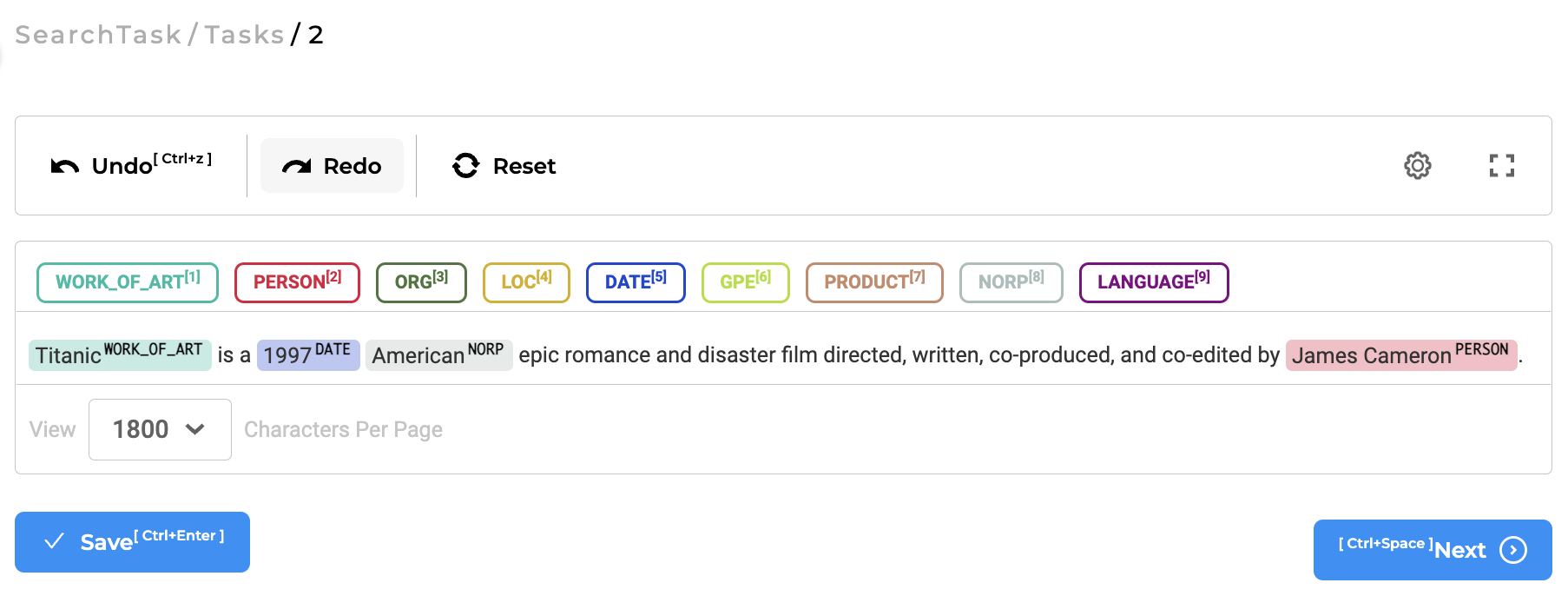

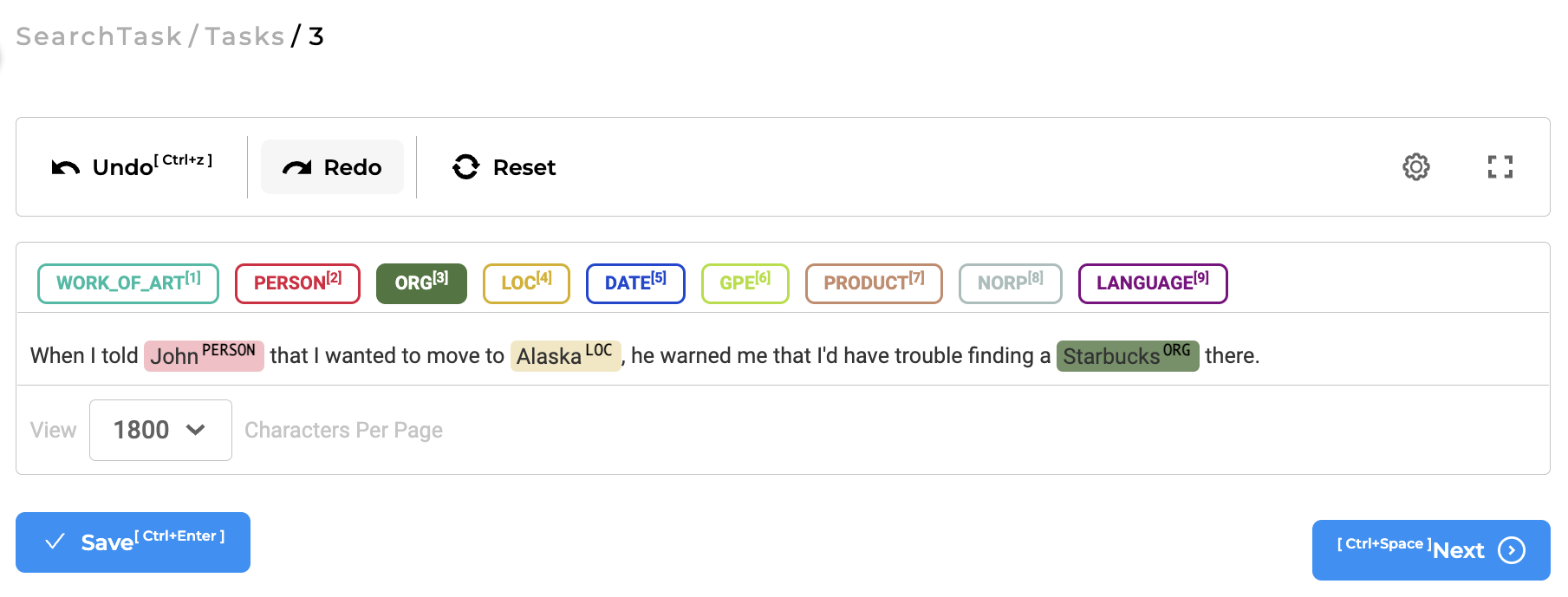

Consider a project with 3 tasks which are annotated as below:

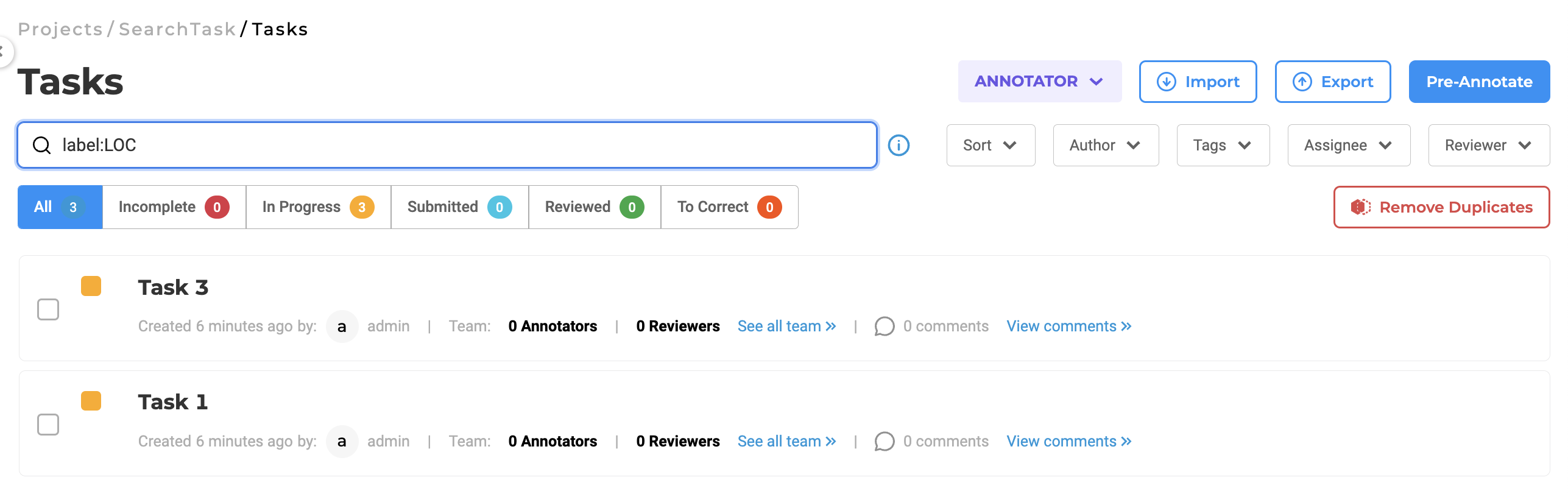

The search query “label:LOC” returns Task 1 and Task 3.

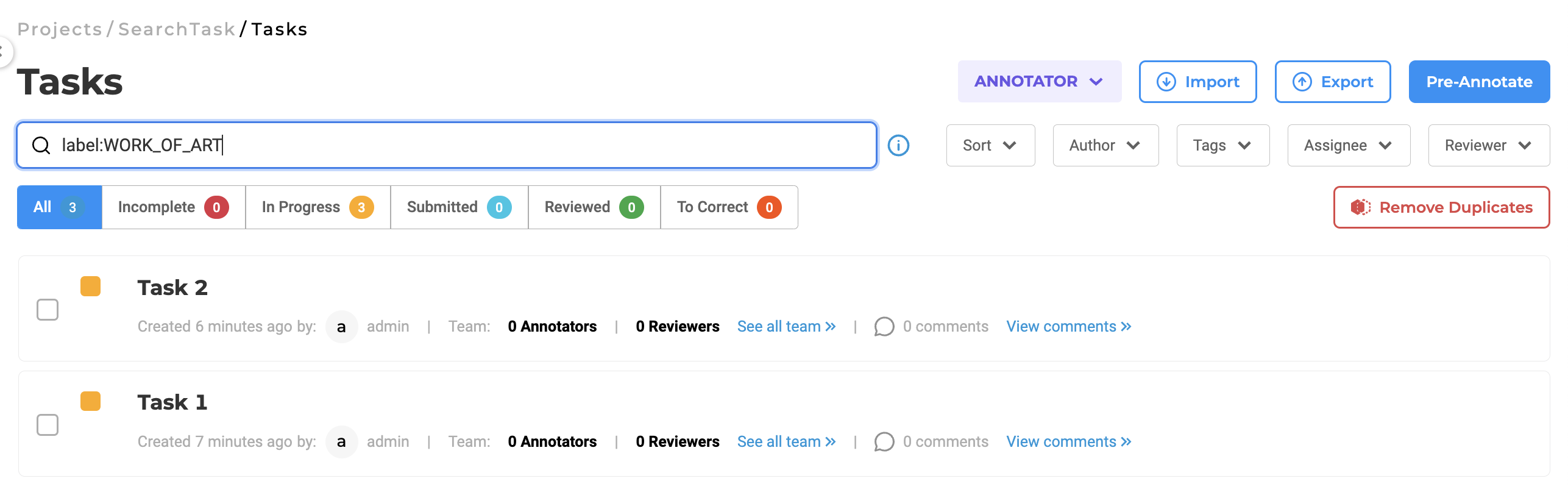

The search query “label:WORK_OF_ART” returns Task 1 and Task 2.

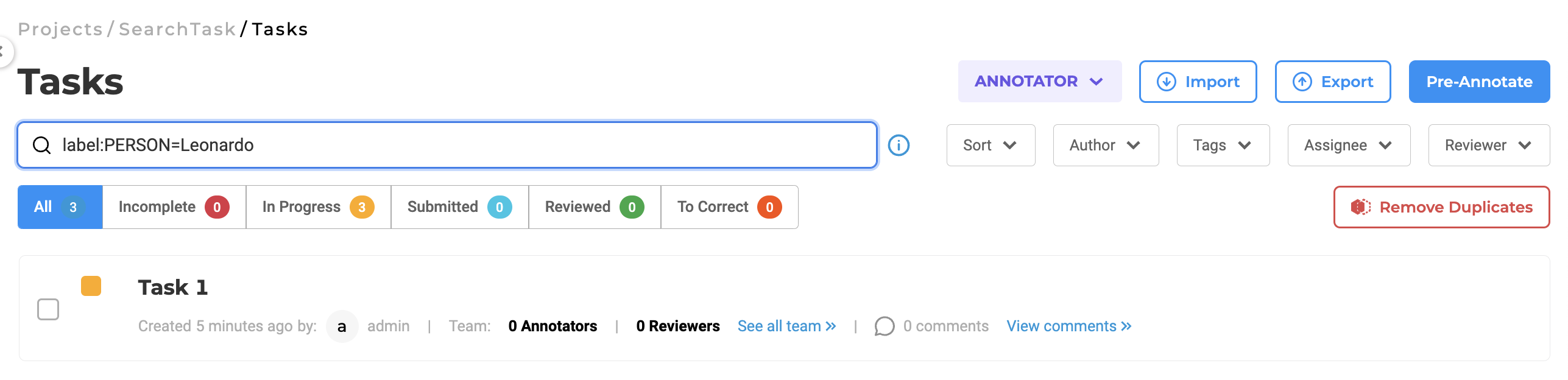

The search query “label:PERSON=Leonardo” returns Task 1.

Comments

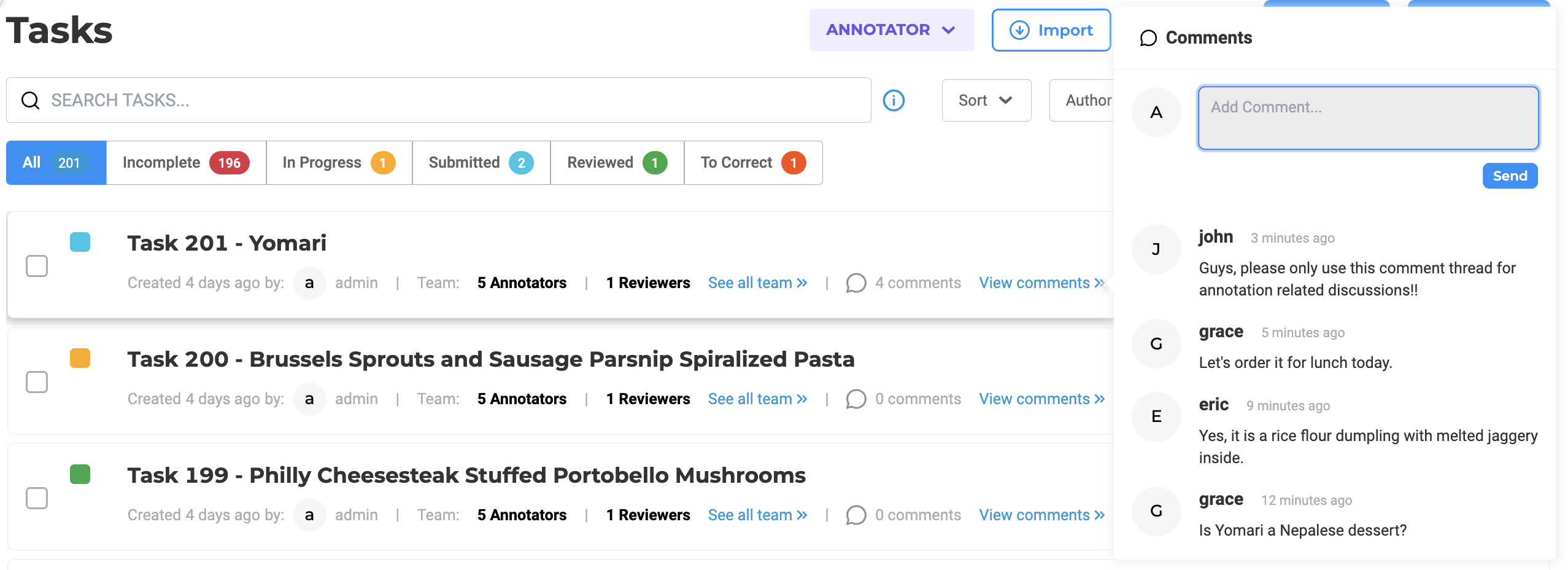

Any team member can add comments to a task by clicking the View comments link on the rightmost side of that task in the Tasks list. Note that these comments are visible to everyone who can view the task.

Bulk Task Assignment

Bulk assignment allows up to 200 unassigned tasks to be distributed at once.

Key Capabilities

- Sequential or Random Assignment: Tasks can be distributed in order or randomly from the unassigned pool.

- Efficient Scaling: Reduces manual effort in large-scale projects, optimizing resource utilization.

User Benefit: Accelerates task distribution, improving operational efficiency and annotation throughput.

Bulk Assignment Process

- Click on the “Assign Task” button.

- Select the annotators to whom you wish to assign tasks.

- Specify the number of tasks to assign to each annotator.

- Define any required criteria for task selection.

- Click on “Assign” to complete the process.

Notes:

- The maximum number of tasks that can be assigned at once is 200.

- If the total number of unassigned tasks is less than the selected task count, all unassigned tasks will be assigned to the first selected annotator.
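
The distribution logic described in this section can be sketched as follows. This is a simplified model under assumptions, not the product's code: `bulk_assign` and its parameters are hypothetical, the 200-task limit is read as applying to the total across all selected annotators, and when the pool is larger than the per-annotator count but smaller than the total requested, later annotators simply receive fewer tasks.

```python
import random

MAX_BULK_TASKS = 200  # documented upper limit for one bulk assignment


def bulk_assign(unassigned, annotators, per_annotator, shuffle=False, seed=None):
    """Distribute unassigned task ids sequentially or randomly (illustrative)."""
    if not annotators or per_annotator < 1:
        raise ValueError("select at least one annotator and one task each")
    if per_annotator * len(annotators) > MAX_BULK_TASKS:
        raise ValueError(f"at most {MAX_BULK_TASKS} tasks can be assigned at once")

    pool = list(unassigned)
    if shuffle:
        random.Random(seed).shuffle(pool)  # random instead of sequential order

    # Documented edge case: too few tasks, so the first annotator gets them all.
    if len(pool) < per_annotator:
        return {annotators[0]: pool}

    return {
        name: pool[i * per_annotator:(i + 1) * per_annotator]
        for i, name in enumerate(annotators)
    }


print(bulk_assign(range(1, 11), ["ann", "bob"], 3))
# {'ann': [1, 2, 3], 'bob': [4, 5, 6]}
print(bulk_assign([1, 2], ["ann", "bob"], 3))
# {'ann': [1, 2]}
```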