PDF processing

This section describes the transformers that extract text and image data from PDF files.

PdfToText

PdfToText extracts text from selectable PDF documents (those with a text layout).

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | text | binary representation of the PDF document |

| originCol | string | path | path to the original file |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| splitPage | bool | true | Whether to split the document into pages |

| textStripper | TextStripperType | TextStripperType.PDF_TEXT_STRIPPER | Extract unstructured text |

| sort | bool | false | Sort text during extraction with TextStripperType.PDF_LAYOUT_STRIPPER |

| partitionNum | int | 0 | Force repartition dataframe if set to value more than 0. |

| onlyPageNum | bool | false | Extract only page numbers. |

| extractCoordinates | bool | false | Extract coordinates and store to the positions column |

| storeSplittedPdf | bool | false | Store single-page PDFs so they can be processed with PdfToImage. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | text | extracted text |

| pageNumCol | string | pagenum | page number or 0 when splitPage = false |

NOTE: For setting parameters use setParamName method.
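The naming rule in the note above can be sketched in plain Python. This is only an illustration of the convention: `setter_name`, `apply_params`, and `FakeTransformer` are hypothetical helpers invented here so the snippet runs without Spark, and they are not part of the library.

```python
def setter_name(param_name: str) -> str:
    """Return the conventional setter name, e.g. splitPage -> setSplitPage."""
    return "set" + param_name[0].upper() + param_name[1:]


def apply_params(transformer, params: dict):
    """Call the matching setter for every (name, value) pair."""
    for name, value in params.items():
        getattr(transformer, setter_name(name))(value)
    return transformer


class FakeTransformer:
    """Hypothetical stand-in for a transformer, used only for this sketch."""

    def __init__(self):
        self.values = {}

    def setSplitPage(self, value):
        self.values["splitPage"] = value
        return self

    def setSort(self, value):
        self.values["sort"] = value
        return self


demo = apply_params(FakeTransformer(), {"splitPage": False, "sort": True})
print(setter_name("pageNumCol"))
print(demo.values)
```

With a real transformer the same idea would apply to any parameter listed in the tables above, assuming the setter follows this naming pattern.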

Example

from sparkocr.transformers import *

pdfPath = "path to pdf with text layout"

# Read PDF file as binary file

df = spark.read.format("binaryFile").load(pdfPath)

transformer = PdfToText() \

.setInputCol("content") \

.setOutputCol("text") \

.setPageNumCol("pagenum") \

.setSplitPage(True)

data = transformer.transform(df)

data.select("pagenum", "text").show()

import com.johnsnowlabs.ocr.transformers.PdfToText

val pdfPath = "path to pdf with text layout"

// Read PDF file as binary file

val df = spark.read.format("binaryFile").load(pdfPath)

val transformer = new PdfToText()

.setInputCol("content")

.setOutputCol("text")

.setPageNumCol("pagenum")

.setSplitPage(true)

val data = transformer.transform(df)

data.select("pagenum", "text").show()

Output:

+-------+----------------------+

|pagenum|text |

+-------+----------------------+

|0 |This is a page. |

|1 |This is another page. |

|2 |Yet another page. |

+-------+----------------------+

PdfToImage

PdfToImage renders a PDF to images. Use it with scanned PDF documents.

The output dataframe contains a total_pages field with the total number of pages.

To process a PDF with a large number of pages, split it by setting the splitNumBatch param.

The number of partitions should equal the number of cores/executors.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | content | binary representation of the PDF document |

| originCol | string | path | path to the original file |

| fallBackCol | string | text | text extracted by a previous method, used to detect whether the transformer needs to run as a fallback |
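The fallback decision driven by fallBackCol and the minSizeBeforeFallback parameter (default 10) can be pictured with a small plain-Python sketch. The function name, the whitespace stripping, and the strict `<` comparison are assumptions made for illustration; the library's exact rule may differ.

```python
def needs_image_fallback(extracted_text, min_size_before_fallback=10):
    """Return True when too little text was extracted to trust the text layout.

    Assumption: None counts as empty text and surrounding whitespace is ignored.
    """
    text = (extracted_text or "").strip()
    return len(text) < min_size_before_fallback


# A scanned page usually yields no selectable text, so OCR would be needed.
print(needs_image_fallback(""))
# A page with a text layout yields enough characters, so no fallback is needed.
print(needs_image_fallback("This is a page."))
```

In a pipeline this corresponds to running PdfToText first and letting PdfToImage render only the pages whose extracted text is too short.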

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| splitPage | bool | true | Whether to split the document into pages |

| minSizeBeforeFallback | int | 10 | Minimum number of extracted characters required to treat the document as a PDF with a text layout |

| imageType | ImageType | ImageType.TYPE_BYTE_GRAY | Type of the image |

| resolution | int | 300 | Output image resolution in dpi |

| keepInput | boolean | false | Keep the input column in the dataframe. By default it is dropped. |

| partitionNum | int | 0 | Number of Spark RDD partitions (0 value - without repartition) |

| binarization | boolean | false | Enable/disable binarization of the image after it is extracted. |

| binarizationParams | Array[String] | null | Array of Binarization params in key=value format. |

| splitNumBatch | int | 0 | Number of partitions or size of partitions, related to the splitting strategy. |

| partitionNumAfterSplit | int | 0 | Number of Spark RDD partitions after splitting pdf document (0 value - without repartition). |

| splittingStategy | SplittingStrategy | SplittingStrategy.FIXED_SIZE_OF_PARTITION | Controls how a single document is split into partitions, each containing a number of pages from the original document. This is useful for processing documents with a high page count. It can be one of {FIXED_SIZE_OF_PARTITION, FIXED_NUMBER_OF_PARTITIONS}. When FIXED_SIZE_OF_PARTITION is used, splitNumBatch is the size of each partition; when FIXED_NUMBER_OF_PARTITIONS is used, splitNumBatch is the number of partitions. |
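To make the two splitting strategies concrete, here is a minimal plain-Python model of how page indices could be grouped. It is a sketch under stated assumptions, not the library's implementation: `split_pages` is a hypothetical helper, a splitNumBatch of 0 is assumed to mean "no splitting", and the ceiling-based sizing for FIXED_NUMBER_OF_PARTITIONS is one plausible choice.

```python
import math


def split_pages(total_pages, split_num_batch, strategy):
    """Group page indices 0..total_pages-1 into partitions (illustrative only)."""
    if total_pages <= 0:
        return []
    if split_num_batch <= 0:
        # Assumption: 0 means the document is kept as a single partition.
        return [list(range(total_pages))]
    if strategy == "FIXED_SIZE_OF_PARTITION":
        size = split_num_batch  # splitNumBatch is the pages per partition
    elif strategy == "FIXED_NUMBER_OF_PARTITIONS":
        size = math.ceil(total_pages / split_num_batch)  # splitNumBatch is the partition count
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [
        list(range(start, min(start + size, total_pages)))
        for start in range(0, total_pages, size)
    ]


print(split_pages(10, 4, "FIXED_SIZE_OF_PARTITION"))
print(split_pages(10, 4, "FIXED_NUMBER_OF_PARTITIONS"))
```

Note that with ceiling-based sizing the second strategy can yield fewer partitions than requested when the page count does not divide evenly (for example 10 pages and 6 requested partitions gives 5 groups of 2).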

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | image | extracted image struct (Image schema) |

| pageNumCol | string | pagenum | page number or 0 when splitPage = false |

Example:

from sparkocr.transformers import *

pdfPath = "path to pdf"

# Read PDF file as binary file

df = spark.read.format("binaryFile").load(pdfPath)

pdfToImage = PdfToImage() \

.setInputCol("content") \

.setOutputCol("image") \

.setPageNumCol("pagenum") \

.setSplitPage(True)

data = pdfToImage.transform(df)

data.select("pagenum", "image").show()

import com.johnsnowlabs.ocr.transformers.PdfToImage

val pdfPath = "path to pdf"

// Read PDF file as binary file

val df = spark.read.format("binaryFile").load(pdfPath)

val pdfToImage = new PdfToImage()

.setInputCol("content")

.setOutputCol("image")

.setPageNumCol("pagenum")

.setSplitPage(true)

val data = pdfToImage.transform(df)

data.select("pagenum", "image").show()

ImageToPdf

ImageToPdf transforms an image into a PDF document.

If the dataframe contains several records for the same origin path, it groups the images by the origin column and creates a multipage PDF document.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

| originCol | string | path | path to the original file |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | content | binary representation of the PDF document |

Example:

Read images and store them as single page PDF documents.

from sparkocr.transformers import *

imagePath = "path to image"

# Read image file as binary file

df = spark.read.format("binaryFile").load(imagePath)

# Define transformer for convert to Image struct

binaryToImage = BinaryToImage() \

.setInputCol("content") \

.setOutputCol("image")

# Define transformer for store to PDF

imageToPdf = ImageToPdf() \

.setInputCol("image") \

.setOutputCol("content")

# Call transformers

image_df = binaryToImage.transform(df)

pdf_df = imageToPdf.transform(image_df)

pdf_df.select("content").show()

import com.johnsnowlabs.ocr.transformers._

val imagePath = "path to image"

// Read image file as binary file

val df = spark.read.format("binaryFile").load(imagePath)

// Define transformer for convert to Image struct

val binaryToImage = new BinaryToImage()

.setInputCol("content")

.setOutputCol("image")

// Define transformer for store to PDF

val imageToPdf = new ImageToPdf()

.setInputCol("image")

.setOutputCol("content")

// Call transformers

val image_df = binaryToImage.transform(df)

val pdf_df = imageToPdf.transform(image_df)

pdf_df.select("content").show()

TextToPdf

TextToPdf renders OCR results to a PDF document as a text layout. Each symbol is rendered at the same position and with the same font size as in the original image or PDF.

If the dataframe contains several records for the same origin path, it groups them by the origin column and creates a multipage PDF document.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | positions | column with positions struct |

| inputImage | string | image | image struct (Image schema) |

| inputText | string | text | column name with recognized text |

| originCol | string | path | path to the original file |

| inputContent | string | content | column name with binary representation of original PDF file |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | | binary representation of the PDF document |

Example:

Read PDF document, run OCR and render results to PDF document.

from pyspark.ml import PipelineModel

from sparkocr.enums import *

from sparkocr.transformers import *

pdfPath = "path to pdf"

# Read PDF file as binary file

df = spark.read.format("binaryFile").load(pdfPath)

pdf_to_image = PdfToImage() \

.setInputCol("content") \

.setOutputCol("image_raw")

binarizer = ImageBinarizer() \

.setInputCol("image_raw") \

.setOutputCol("image") \

.setThreshold(130)

ocr = ImageToText() \

.setInputCol("image") \

.setOutputCol("text") \

.setIgnoreResolution(False) \

.setPageSegMode(PageSegmentationMode.SPARSE_TEXT) \

.setConfidenceThreshold(60)

textToPdf = TextToPdf() \

.setInputCol("positions") \

.setInputImage("image") \

.setOutputCol("pdf")

pipeline = PipelineModel(stages=[

pdf_to_image,

binarizer,

ocr,

textToPdf

])

result = pipeline.transform(df).collect()

# Store to file for debug

with open("test.pdf", "wb") as file:

file.write(result[0].pdf)

import java.io.FileOutputStream

import java.nio.file.Files

import org.apache.spark.ml.Pipeline

import com.johnsnowlabs.ocr.transformers._

val pdfPath = "path to pdf"

// Read PDF file as binary file

val df = spark.read.format("binaryFile").load(pdfPath)

val pdfToImage = new PdfToImage()

.setInputCol("content")

.setOutputCol("image_raw")

.setResolution(400)

val binarizer = new ImageBinarizer()

.setInputCol("image_raw")

.setOutputCol("image")

.setThreshold(130)

val ocr = new ImageToText()

.setInputCol("image")

.setOutputCol("text")

.setIgnoreResolution(false)

.setPageSegMode(PageSegmentationMode.SPARSE_TEXT)

.setConfidenceThreshold(60)

val textToPdf = new TextToPdf()

.setInputCol("positions")

.setInputImage("image")

.setOutputCol("pdf")

val pipeline = new Pipeline()

pipeline.setStages(Array(

pdfToImage,

binarizer,

ocr,

textToPdf

))

val modelPipeline = pipeline.fit(df)

val pdf = modelPipeline.transform(df)

val pdfContent = pdf.select("pdf").collect().head.getAs[Array[Byte]](0)

// store to file

val tmpFile = Files.createTempFile("text_to_pdf_", ".pdf").toAbsolutePath.toString

val fos = new FileOutputStream(tmpFile)

fos.write(pdfContent)

fos.close()

println(tmpFile)

PdfAssembler

PdfAssembler groups single-page PDF documents by filename and assembles them into a multipage PDF document.
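The grouping step can be pictured with a small plain-Python sketch. It only models how rows might be collected per file; the real merging of PDF pages is done by the transformer. `group_pages` is a hypothetical helper, and ordering pages by page number within each file is an assumption.

```python
from collections import defaultdict


def group_pages(rows):
    """Group (path, pagenum, page_pdf) rows by path, ordered by page number."""
    grouped = defaultdict(list)
    for path, pagenum, page_pdf in rows:
        grouped[path].append((pagenum, page_pdf))
    return {
        path: [pdf for _, pdf in sorted(pages, key=lambda page: page[0])]
        for path, pages in grouped.items()
    }


# Placeholder bytes stand in for single-page PDF content.
rows = [
    ("a.pdf", 1, b"A1"),
    ("b.pdf", 0, b"B0"),
    ("a.pdf", 0, b"A0"),
]
documents = group_pages(rows)
print(documents)
```

Each resulting list corresponds to the pages that would be assembled into one output document for that path.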

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | page_pdf | binary representation of the PDF document |

| originCol | string | path | path to the original file |

| pageNumCol | string | pagenum | for compatibility with other transformers |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | | binary representation of the PDF document |

Example:

from pyspark.ml import PipelineModel

from sparkocr.transformers import *

pdfPath = "path to pdf"

# Read PDF file as binary file

df = spark.read.format("binaryFile").load(pdfPath)

pdf_to_image = PdfToImage() \

.setInputCol("content") \

.setOutputCol("image") \

.setKeepInput(True)

# Run OCR and render results to PDF

ocr = ImageToTextPdf() \

.setInputCol("image") \

.setOutputCol("pdf_page")

# Assemble multipage PDF

pdf_assembler = PdfAssembler() \

.setInputCol("pdf_page") \

.setOutputCol("pdf")

pipeline = PipelineModel(stages=[

pdf_to_image,

ocr,

pdf_assembler

])

pdf = pipeline.transform(df)

pdfContent = pdf.select("pdf").collect()

# store pdf to file

with open("test.pdf", "wb") as file:

file.write(pdfContent[0].pdf)

import java.io.FileOutputStream

import java.nio.file.Files

import org.apache.spark.ml.Pipeline

import com.johnsnowlabs.ocr.transformers._

val pdfPath = "path to pdf"

// Read PDF file as binary file

val df = spark.read.format("binaryFile").load(pdfPath)

val pdf_to_image = new PdfToImage()

.setInputCol("content")

.setOutputCol("image")

.setKeepInput(true)

// Run OCR and render results to PDF

val ocr = new ImageToTextPdf()

.setInputCol("image")

.setOutputCol("pdf_page")

// Assemble multipage PDF

val pdf_assembler = new PdfAssembler()

.setInputCol("pdf_page")

.setOutputCol("pdf")

// Create pipeline

val pipeline = new Pipeline()

.setStages(Array(

pdf_to_image,

ocr,

pdf_assembler

))

val pdf = pipeline.fit(df).transform(df)

val pdfContent = pdf.select("pdf").collect().head.getAs[Array[Byte]](0)

// store to pdf file

val tmpFile = Files.createTempFile("with_regions_", s".pdf").toAbsolutePath.toString

val fos = new FileOutputStream(tmpFile)

fos.write(pdfContent)

fos.close()

println(tmpFile)

PdfDrawRegions

PdfDrawRegions draws regions on a PDF document.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | content | binary representation of the PDF document |

| originCol | string | path | path to the original file |

| inputRegionsCol | string | region | input column that contains the regions |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| lineWidth | integer | 1 | line width used to draw regions |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | pdf_regions | binary representation of the PDF document |

Example:

from pyspark.ml import Pipeline

from sparkocr.transformers import *

from sparknlp.annotator import *

from sparknlp.base import *

pdfPath = "path to pdf"

# Read PDF file as binary file

df = spark.read.format("binaryFile").load(pdfPath)

pdf_to_text = PdfToText() \

.setInputCol("content") \

.setOutputCol("text") \

.setPageNumCol("page") \

.setSplitPage(False)

document_assembler = DocumentAssembler() \

.setInputCol("text") \

.setOutputCol("document")

sentence_detector = SentenceDetector() \

.setInputCols(["document"]) \

.setOutputCol("sentence")

tokenizer = Tokenizer() \

.setInputCols(["sentence"]) \

.setOutputCol("token")

entity_extractor = TextMatcher() \

.setInputCols("sentence", "token") \

.setEntities("./sparkocr/resources/test-chunks.txt", ReadAs.TEXT) \

.setOutputCol("entity")

position_finder = PositionFinder() \

.setInputCols("entity") \

.setOutputCol("coordinates") \

.setPageMatrixCol("positions") \

.setMatchingWindow(10) \

.setPadding(2)

draw = PdfDrawRegions() \

.setInputRegionsCol("coordinates") \

.setOutputCol("pdf_regions") \

.setInputCol("content") \

.setLineWidth(1)

pipeline = Pipeline(stages=[

pdf_to_text,

document_assembler,

sentence_detector,

tokenizer,

entity_extractor,

position_finder,

draw

])

pdfWithRegions = pipeline.fit(df).transform(df)

pdfContent = pdfWithRegions.select("pdf_regions").collect()

# store to pdf to tmp file

with open("test.pdf", "wb") as file:

file.write(pdfContent[0].pdf_regions)

import java.io.FileOutputStream

import java.nio.file.Files

import org.apache.spark.ml.Pipeline

import com.johnsnowlabs.ocr.transformers._

import com.johnsnowlabs.nlp.{DocumentAssembler, SparkAccessor}

import com.johnsnowlabs.nlp.annotators._

import com.johnsnowlabs.nlp.util.io.ReadAs

val pdfPath = "path to pdf"

// Read PDF file as binary file

val df = spark.read.format("binaryFile").load(pdfPath)

val pdfToText = new PdfToText()

.setInputCol("content")

.setOutputCol("text")

.setSplitPage(false)

val documentAssembler = new DocumentAssembler()

.setInputCol("text")

.setOutputCol("document")

val sentenceDetector = new SentenceDetector()

.setInputCols(Array("document"))

.setOutputCol("sentence")

val tokenizer = new Tokenizer()

.setInputCols(Array("sentence"))

.setOutputCol("token")

val entityExtractor = new TextMatcher()

.setInputCols("sentence", "token")

.setEntities("test-chunks.txt", ReadAs.TEXT)

.setOutputCol("entity")

val positionFinder = new PositionFinder()

.setInputCols("entity")

.setOutputCol("coordinates")

.setPageMatrixCol("positions")

.setMatchingWindow(10)

.setPadding(2)

val pdfDrawRegions = new PdfDrawRegions()

.setInputRegionsCol("coordinates")

// Create pipeline

val pipeline = new Pipeline()

.setStages(Array(

pdfToText,

documentAssembler,

sentenceDetector,

tokenizer,

entityExtractor,

positionFinder,

pdfDrawRegions

))

val pdfWithRegions = pipeline.fit(df).transform(df)

val pdfContent = pdfWithRegions.select("pdf_regions").collect().head.getAs[Array[Byte]](0)

// store to pdf to tmp file

val tmpFile = Files.createTempFile("with_regions_", s".pdf").toAbsolutePath.toString

val fos = new FileOutputStream(tmpFile)

fos.write(pdfContent)

fos.close()

println(tmpFile)

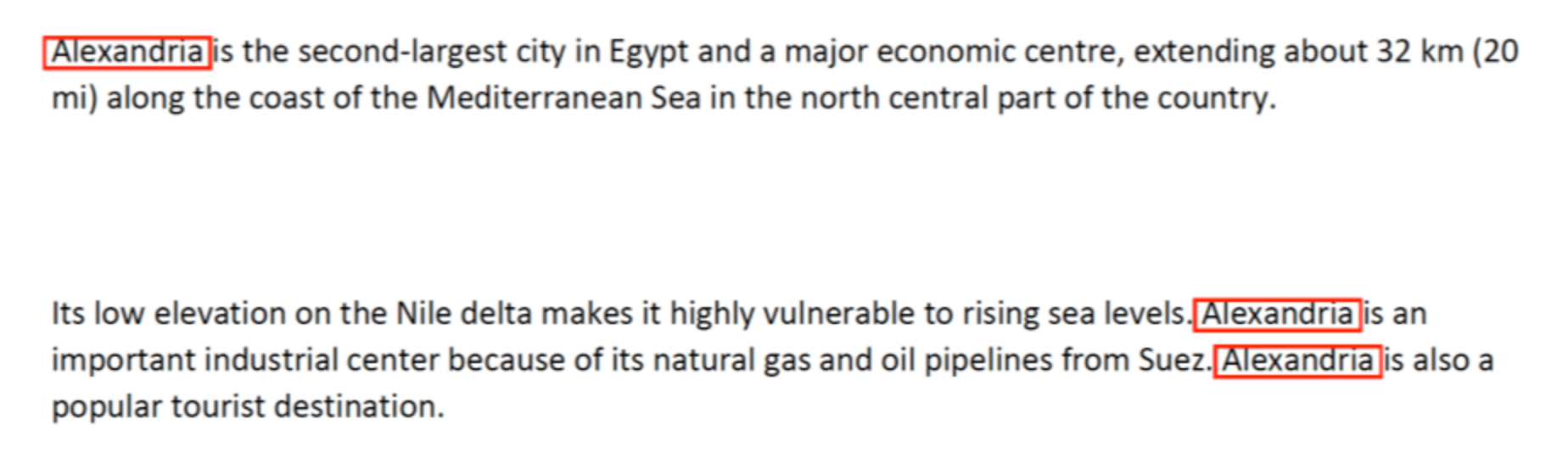

Results:

PdfToTextTable

PdfToTextTable extracts tables from a page of a PDF document. The input is a column with the binary representation of the PDF document. The output is a column with the tables and the coordinates of the table text chunks (rows/cols).

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | text | binary representation of the PDF document |

| originCol | string | path | path to the original file |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| pageIndex | integer | -1 | Page index to extract Tables. |

| guess | bool | false | A logical indicating whether to guess the locations of tables on each page. |

| method | string | decide | Identifies the preferred method of table extraction: basic, spreadsheet. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | TableContainer | tables | Extracted tables |

Example:

from pyspark.ml import Pipeline

from sparkocr.transformers import *

from sparknlp.annotator import *

from sparknlp.base import *

pdfPath = "path to pdf"

# Read PDF file as binary file

df = spark.read.format("binaryFile").load(pdfPath)

pdf_to_text_table = PdfToTextTable()

pdf_to_text_table.setInputCol("content")

pdf_to_text_table.setOutputCol("table")

pdf_to_text_table.setPageIndex(1)

pdf_to_text_table.setMethod("basic")

table = pdf_to_text_table.transform(df)

# Show first row

table.select(table["table.chunks"].getItem(1)["chunkText"]).show(1, False)

import java.io.FileOutputStream

import java.nio.file.Files

import com.johnsnowlabs.ocr.transformers._

import com.johnsnowlabs.nlp.{DocumentAssembler, SparkAccessor}

import com.johnsnowlabs.nlp.annotators._

import com.johnsnowlabs.nlp.util.io.ReadAs

val pdfPath = "path to pdf"

// Read PDF file as binary file

val df = spark.read.format("binaryFile").load(pdfPath)

val pdfToTextTable = new PdfToTextTable()

.setInputCol("content")

.setOutputCol("table")

.setPageIndex(1)

.setMethod("basic")

val table = pdfToTextTable.transform(df)

// Show first row

table.select(table("table.chunks").getItem(1).getField("chunkText")).show(1, false)

Output:

+------------------------------------------------------------------+

|table.chunks AS chunks#760[1].chunkText |

+------------------------------------------------------------------+

|[Mazda RX4, 21.0, 6, , 160.0, 110, 3.90, 2.620, 16.46, 0, 1, 4, 4]|

+------------------------------------------------------------------+

DOCX processing

This section describes the transformers that extract text and table data from DOCX files.

DocToText

DocToText extracts text from the DOCX document.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | text | binary representation of the DOCX document |

| originCol | string | path | path to the original file |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | text | extracted text |

| pageNumCol | string | pagenum | for compatibility with other transformers |

NOTE: For setting parameters use setParamName method.

Example

from sparkocr.transformers import *

docPath = "path to docx with text layout"

# Read DOCX file as binary file

df = spark.read.format("binaryFile").load(docPath)

transformer = DocToText() \

.setInputCol("content") \

.setOutputCol("text")

data = transformer.transform(df)

data.select("pagenum", "text").show()

import com.johnsnowlabs.ocr.transformers.DocToText

val docPath = "path to docx with text layout"

// Read DOCX file as binary file

val df = spark.read.format("binaryFile").load(docPath)

val transformer = new DocToText()

.setInputCol("content")

.setOutputCol("text")

val data = transformer.transform(df)

data.select("pagenum", "text").show()

DocToTextTable

DocToTextTable extracts table data from the DOCX documents.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | text | binary representation of the DOCX document |

| originCol | string | path | path to the original file |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | TableContainer | tables | Extracted tables |

NOTE: For setting parameters use setParamName method.

Example

from sparkocr.transformers import *

docPath = "path to docx with text layout"

# Read DOCX file as binary file

df = spark.read.format("binaryFile").load(docPath)

transformer = DocToTextTable() \

.setInputCol("content") \

.setOutputCol("tables")

data = transformer.transform(df)

data.select("tables").show()

import com.johnsnowlabs.ocr.transformers.DocToTextTable

val docPath = "path to docx with text layout"

// Read DOCX file as binary file

val df = spark.read.format("binaryFile").load(docPath)

val transformer = new DocToTextTable()

.setInputCol("content")

.setOutputCol("tables")

val data = transformer.transform(df)

data.select("tables").show()

DocToPdf

DocToPdf converts a DOCX document to a PDF document.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | text | binary representation of the DOCX document |

| originCol | string | path | path to the original file |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | text | binary representation of the PDF document |

NOTE: For setting parameters use setParamName method.

Example

from sparkocr.transformers import *

docPath = "path to docx with text layout"

# Read DOCX file as binary file

df = spark.read.format("binaryFile").load(docPath)

transformer = DocToPdf() \

.setInputCol("content") \

.setOutputCol("pdf")

data = transformer.transform(df)

data.select("pdf").show()

import com.johnsnowlabs.ocr.transformers.DocToPdf

val docPath = "path to docx with text layout"

// Read DOCX file as binary file

val df = spark.read.format("binaryFile").load(docPath)

val transformer = new DocToPdf()

.setInputCol("content")

.setOutputCol("pdf")

val data = transformer.transform(df)

data.select("pdf").show()

PptToTextTable

PptToTextTable extracts table data from the PPT and PPTX documents.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | text | binary representation of the PPT document |

| originCol | string | path | path to the original file |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | TableContainer | tables | Extracted tables |

NOTE: For setting parameters use setParamName method.

Example

from sparkocr.transformers import *

docPath = "path to PPT or PPTX file"

# Read PPT file as binary file

df = spark.read.format("binaryFile").load(docPath)

transformer = PptToTextTable() \

.setInputCol("content") \

.setOutputCol("tables")

data = transformer.transform(df)

data.select("tables").show()

import com.johnsnowlabs.ocr.transformers.PptToTextTable

val docPath = "path to PPT or PPTX file"

// Read PPT file as binary file

val df = spark.read.format("binaryFile").load(docPath)

val transformer = new PptToTextTable()

.setInputCol("content")

.setOutputCol("tables")

val data = transformer.transform(df)

data.select("tables").show()

PptToPdf

PptToPdf converts PPT and PPTX documents to PDF documents.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | text | binary representation of the PPT document |

| originCol | string | path | path to the original file |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | text | binary representation of the PDF document |

NOTE: For setting parameters use setParamName method.

Example

from sparkocr.transformers import *

docPath = "path to PPT with text layout"

# Read PPT file as binary file

df = spark.read.format("binaryFile").load(docPath)

transformer = PptToPdf() \

.setInputCol("content") \

.setOutputCol("pdf")

data = transformer.transform(df)

data.select("pdf").show()

import com.johnsnowlabs.ocr.transformers.PptToPdf

val docPath = "path to PPT or PPTX file"

// Read PPT file as binary file

val df = spark.read.format("binaryFile").load(docPath)

val transformer = new PptToPdf()

.setInputCol("content")

.setOutputCol("pdf")

val data = transformer.transform(df)

data.select("pdf").show()

Dicom processing

DicomToImage

DicomToImage transforms a DICOM object (loaded as a binary file) into an image struct.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | content | binary dicom object |

| originCol | string | path | path to the original file |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | image | extracted image struct (Image schema) |

| pageNumCol | integer | pagenum | page (image) number, starting from 0 |

| metadataCol | string | metadata | Output column name for DICOM metadata (JSON formatted) |

Example:

from sparkocr.transformers import *

dicomPath = "path to dicom files"

# Read dicom file as binary file

df = spark.read.format("binaryFile").load(dicomPath)

dicomToImage = DicomToImage() \

.setInputCol("content") \

.setOutputCol("image") \

.setMetadataCol("meta")

data = dicomToImage.transform(df)

data.select("image", "pagenum", "meta").show()

import com.johnsnowlabs.ocr.transformers.DicomToImage

val dicomPath = "path to dicom files"

// Read dicom file as binary file

val df = spark.read.format("binaryFile").load(dicomPath)

val dicomToImage = new DicomToImage()

.setInputCol("content")

.setOutputCol("image")

.setMetadataCol("meta")

val data = dicomToImage.transform(df)

data.select("image", "pagenum", "meta").show()

ImageToDicom

ImageToDicom transforms an image into a DICOM document.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

| originCol | string | path | path to the original file |

| metadataCol | string | metadata | DICOM metadata (JSON formatted) |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | dicom | binary dicom object |

Example:

from sparkocr.transformers import *

imagePath = "path to image file"

# Read image file as binary file

df = spark.read.format("binaryFile").load(imagePath)

binaryToImage = BinaryToImage() \

.setInputCol("content") \

.setOutputCol("image")

image_df = binaryToImage.transform(df)

imageToDicom = ImageToDicom() \

.setInputCol("image") \

.setOutputCol("dicom")

data = imageToDicom.transform(image_df)

data.select("dicom").show()

import com.johnsnowlabs.ocr.transformers.ImageToDicom

val imagePath = "path to image file"

// Read image file as binary file

val df = spark.read

.format("binaryFile")

.load(imagePath)

.asImage("image")

val imageToDicom = new ImageToDicom()

.setInputCol("image")

.setOutputCol("dicom")

val data = imageToDicom.transform(df)

data.select("dicom").show()

DicomToImageV2

DicomToImageV2 converts DICOM frames into images.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | content | Specifies the column containing the DICOM file path or buffer input |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| KeepInput | boolean | False | Indicates whether input columns should be retained in the resulting DataFrame |

| PageNumCol | string | - | Specifies the column containing the page number in the resulting DataFrame |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | image | Specifies the column containing the images in the resulting DataFrame |

Example:

from sparkocr.transformers import DicomToImageV2

from sparkocr.utils import display_images

df = spark.read.format("binaryFile").load(path_to_dicom_file)

dicom_to_image_v2 = DicomToImageV2() \

.setInputCols(["path"]) \

.setKeepInput(True) \

.setOutputCol("image_raw") \

.setPageNumCol("pagenum")

result = dicom_to_image_v2.transform(df).cache()

display_images(result, "image_raw")

import com.johnsnowlabs.ocr.transformers.DicomToImageV2

import com.johnsnowlabs.ocr.utils.display_images

val df = spark.read.format("binaryFile").load(path_to_dicom_file)

val dicom_to_image_v2 = new DicomToImageV2()

.setInputCols(Array("path"))

.setKeepInput(true)

.setOutputCol("image_raw")

.setPageNumCol("pagenum")

val result = dicom_to_image_v2.transform(df).cache()

display_images(result, "image_raw")

DicomToImageV3

DicomToImageV3 converts DICOM frames into images. It is faster than DicomToImageV2.

Input Columns

| Param name | Type | Default | Description |

|---|---|---|---|

| keepInput | boolean | False | Indicates whether input columns should be retained in the resulting DataFrame |

| pageNumCol | string | - | Specifies the column containing the page number in the resulting DataFrame |

| scale | float | 1 | The scale factor by which the image will be rescaled. Default is ‘1’ (no scaling). |

| frameLimit | int | 0 | Limits the number of frames extracted; set to 0 to extract all frames. |

| compressionThreshold | int | 1 | File size threshold in MB that triggers compression when compressionMode is "auto". |

| compressionMode | string ("auto", "enabled", "disabled") | disabled | Compression behavior: "enabled" compresses every file using compressionQuality; "disabled" never compresses; "auto" compresses only if file size ≥ compressionThreshold. |

| compressionQuality | int (1–95) | 85 | JPEG quality used when compressing (higher = better quality/larger size). |
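The interaction between compressionMode and compressionThreshold described in the table can be summarized in a few lines of plain Python. This is a sketch of the documented rule only; `should_compress` is a hypothetical helper and not a library function.

```python
def should_compress(file_size_mb, mode="disabled", threshold_mb=1):
    """Decide whether a file would be compressed for a given compressionMode."""
    if mode == "enabled":
        return True  # every file is compressed
    if mode == "disabled":
        return False  # no file is compressed
    if mode == "auto":
        # compress only when the file size reaches the threshold (in MB)
        return file_size_mb >= threshold_mb
    raise ValueError(f"unknown compressionMode: {mode}")


print(should_compress(0.5, mode="auto"))
print(should_compress(2.0, mode="auto"))
print(should_compress(2.0, mode="disabled"))
```

When compression does apply, compressionQuality (1 to 95, default 85) controls the JPEG quality.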

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| KeepInput | boolean | False | Indicates whether input columns should be retained in the resulting DataFrame |

| PageNumCol | string | - | Specifies the column containing the page number in the resulting DataFrame |

| Scale | boolean | 0 | The desired width of the input image, to which the image will be resized |

| FrameLimit | int | 0 | Limits the number of frames extracted; set to 0 to extract all frames. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | - | Specifies the column in the output DataFrame that contains the raw images |

Example:

from sparkocr.transformers import DicomSplitter, DicomToImageV3

from sparkocr.enums import SplittingStrategy

from pyspark.ml import PipelineModel

df = spark.read.format("binaryFile").load(dicom_file_path).drop("content")

dicom_splitter = DicomSplitter() \

.setInputCol("path") \

.setOutputCol("frames") \

.setKeepInput(True) \

.setSplitNumBatch(2) \

.setPartitionNum(10) \

.setSplittingStategy(SplittingStrategy.FIXED_SIZE_OF_PARTITION)

dicom_to_image = DicomToImageV3() \

.setInputCols(["path", "frames"]) \

.setOutputCol("image_raw") \

.setKeepInput(False) \

.setPageNumCol("page_number") \

.setScale(1) \

.setFrameLimit(0)

pipeline = PipelineModel(stages=[

dicom_splitter,

dicom_to_image

])

result = pipeline.transform(df).cache()

import com.johnsnowlabs.ocr.transformers.DicomSplitter

import com.johnsnowlabs.ocr.transformers.DicomToImageV3

import com.johnsnowlabs.ocr.enums.SplittingStrategy

import org.apache.spark.ml.Pipeline

val df = spark.read.format("binaryFile").load(dicom_file_path).drop("content")

val dicom_splitter = new DicomSplitter()

.setInputCol("path")

.setOutputCol("frames")

.setKeepInput(true)

.setSplitNumBatch(2)

.setPartitionNum(10)

.setSplittingStategy(SplittingStrategy.FIXED_SIZE_OF_PARTITION)

val dicom_to_image = new DicomToImageV3()

.setInputCols(Array("path", "frames"))

.setOutputCol("image_raw")

.setKeepInput(false)

.setPageNumCol("page_number")

.setScale(1)

.setFrameLimit(0)

// PipelineModel has no public constructor in Scala, so build a Pipeline and fit it

val pipeline = new Pipeline().setStages(Array(

dicom_splitter,

dicom_to_image

))

val result = pipeline.fit(df).transform(df).cache()

DicomDrawRegions

DicomDrawRegions draws regions on a DICOM image.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | content | Binary dicom object |

| inputRegionsCol | string | regions | Detected Array[Coordinates] from PositionFinder |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| scaleFactor | float | 1.0 | Scaling factor for regions. |

| rotated | boolean | False | Enable/Disable support for rotated rectangles |

| keepInput | boolean | False | Keep the original input column |

| compression | string | RLELossless | Compression type |

| forceCompress | boolean | False | True - Force compress image. False - compress only if original image was compressed |

| aggCols | Array[string] | [‘path’] | Sets the columns to be included in aggregation. These columns are preserved in the output DataFrame after transformations |

| forceOutput | boolean | False | Enables decompressed output for DICOM files when compression is unsupported or format constraints prevent re-compression |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | image | Modified Dicom file data |

Example:

from sparkocr.transformers import *

dicomPath = "path to dicom files"

# Read dicom file as binary file

df = spark.read.format("binaryFile").load(dicomPath)

dicomToImage = DicomToImage() \

.setInputCol("content") \

.setOutputCol("image") \

.setMetadataCol("meta")

# Usually chunks are created using the deidentification_nlp_pipeline

position_finder = PositionFinder() \

.setInputCols("ner_chunk") \

.setOutputCol("coordinates") \

.setPageMatrixCol("positions") \

.setPadding(0)

draw_regions = DicomDrawRegions() \

.setInputCol("content") \

.setInputRegionsCol("coordinates") \

.setOutputCol("dicom") \

.setKeepInput(True) \

.setScaleFactor(1/3.0) \

.setAggCols(["path", "content"])

data = dicomToImage.transform(df)

data.select("content", "dicom").show()

// Note: DicomDrawRegions class is not available in the Scala API

// This class is used in the Python API for DICOM image manipulation and transformation.

DicomSplitter

DicomSplitter splits the dicom file into several frames.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | content | Binary dicom object |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| PartitionNum | Int | 5 | The number of partitions in the output DataFrame |

| SplitNumBatch | Int | 2 | The number of frames per row for the input DICOM file |

| KeepInput | boolean | False | Indicates whether the input columns should be included in the result DataFrame |

| SplittingStrategy | enum | FixedSizePartition | Defines the partitioning strategy, either a fixed number of partitions (evenly dividing data across a set number of partitions) or a fixed size of partitions (each partition containing a specified number of elements) |
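
The difference between the two partitioning strategies can be sketched with plain Python lists. This is only an illustration of the idea described in the table; `split_fixed_size` and `split_fixed_number` are hypothetical helpers and do not reflect how DicomSplitter is implemented.

```python
from typing import List, Sequence


def split_fixed_size(frames: Sequence[int], size: int) -> List[List[int]]:
    """Fixed size of partitions: each partition holds at most `size` elements."""
    return [list(frames[i:i + size]) for i in range(0, len(frames), size)]


def split_fixed_number(frames: Sequence[int], num_partitions: int) -> List[List[int]]:
    """Fixed number of partitions: spread elements as evenly as possible."""
    base, extra = divmod(len(frames), num_partitions)
    parts, start = [], 0
    for i in range(num_partitions):
        # The first `extra` partitions receive one additional element
        end = start + base + (1 if i < extra else 0)
        parts.append(list(frames[start:end]))
        start = end
    return parts


frames = list(range(7))  # stand-in for seven frame indices
print(split_fixed_size(frames, 2))
print(split_fixed_number(frames, 3))
```

With a fixed size the number of partitions grows with the input, while with a fixed number the partition size does.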

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | image | The output column name containing the DICOM file’s image frames |

Example:

from sparkocr.transformers import DicomSplitter

from sparkocr.enums import SplittingStrategy

df = spark.read.format("binaryFile").load(dicom_file_path).drop("content")

dicom_splitter = DicomSplitter() \

.setInputCol("path") \

.setOutputCol("frames") \

.setKeepInput(True) \

.setSplitNumBatch(2) \

.setPartitionNum(10) \

.setSplittingStategy(SplittingStrategy.FIXED_SIZE_OF_PARTITION)

result = dicom_splitter.transform(df).cache()

import com.johnsnowlabs.ocr.transformers.DicomSplitter

import com.johnsnowlabs.ocr.enums.SplittingStrategy

val df = spark.read.format("binaryFile").load(dicom_file_path).drop("content")

val dicom_splitter = new DicomSplitter()

.setInputCol("path")

.setOutputCol("frames")

.setKeepInput(true)

.setSplitNumBatch(2)

.setPartitionNum(10)

.setSplittingStategy(SplittingStrategy.FIXED_SIZE_OF_PARTITION)

val result = dicom_splitter.transform(df).cache()

DicomMetadataDeidentifier

Remove Protected Health Information from dicom tags.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCols | array[string] | array[content] | Expects the path or buffer for the DICOM file, along with optional metadata. |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| KeepInput | boolean | False | Indicates whether the input columns should be included in the result DataFrame |

| PlaceHolderText | string | anonymous | Specifies the placeholder text used to deidentify sensitive PHI (Protected Health Information) in DICOM tags |

| RemovePrivateTags | boolean | False | Indicates whether private tags should be removed from the DICOM file |

| BlackList | array[string] | - | Contains a list of specific DICOM tags to be deidentified; only tags in this list will be processed for deidentification if provided |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | - | Column that stores the final DICOM file after de-identifying DICOM tags |

Example:

from sparkocr.transformers import DicomMetadataDeidentifier

df = spark.read.format("binaryFile").load(dicom_file_path)

dicom_deidentifier = DicomMetadataDeidentifier() \

.setInputCols(["path"]) \

.setOutputCol("dicom_deidentified") \

.setKeepInput(True) \

.setPlaceholderText("***") \

.setRemovePrivateTags(True)

result = dicom_deidentifier.transform(df).cache()

result.write.format("binaryFormat") \

.option("type", "dicom") \

.option("field", "dicom_deidentified") \

.option("extension", "dcm") \

.option("prefix", "de-id-ybr") \

.mode("overwrite") \

.save("/content/dicom")

import com.johnsnowlabs.ocr.transformers.DicomMetadataDeidentifier

val df = spark.read.format("binaryFile").load(dicom_file_path)

val dicom_deidentifier = new DicomMetadataDeidentifier()

.setInputCols(Array("path"))

.setOutputCol("dicom_deidentified")

.setKeepInput(true)

.setPlaceholderText("***")

.setRemovePrivateTags(true)

val result = dicom_deidentifier.transform(df).cache()

result.write.format("binaryFormat")

.option("type", "dicom")

.option("field", "dicom_deidentified")

.option("extension", "dcm")

.option("prefix", "de-id-ybr")

.mode("overwrite")

.save("/content/dicom")

DicomDeidentifier

Uses metadata and detected text positions to locate and de-identify sensitive information in both pixel data and metadata.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCols | array[string] | array[content] | Accepts the positions and dicom metadata as input. |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| KeepInput | boolean | False | Indicates whether the input columns should be included in the result DataFrame |

| BlackList | array[string] | anonymous | Specifies a list of words to be removed from pixels. |

| BlackListFile | string | False | Path to CSV file containing a list of words to be removed from pixels. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | - | Specifies the column containing the dicom in the resulting DataFrame. |

Example:

from sparkocr.transformers import DicomToMetadata, DicomSplitter, DicomToImageV3, ImageTextDetector, ImageToTextV3, DicomDeidentifier

from sparkocr.enums import OcrOutputFormat, SplittingStrategy

from pyspark.ml import PipelineModel

df = spark.read.format("binaryFile").load(path_to_dicom_file).drop("content")

dicom_to_meta = DicomToMetadata() \

.setInputCol("path") \

.setOutputCol("metadata") \

.setKeepInput(True)

dicom_splitter = DicomSplitter() \

.setInputCol("path") \

.setOutputCol("frames") \

.setKeepInput(True) \

.setSplitNumBatch(2) \

.setPartitionNum(10) \

.setSplittingStategy(SplittingStrategy.FIXED_SIZE_OF_PARTITION)

dicom_to_image = DicomToImageV3() \

.setInputCols(["path", "frames"]) \

.setOutputCol("image_raw") \

.setKeepInput(True)

text_detector = ImageTextDetector.pretrained("image_text_detector_opt", "en", "clinical/ocr") \

.setInputCol("image_raw") \

.setOutputCol("text_regions") \

.setSizeThreshold(10) \

.setScoreThreshold(0.9) \

.setLinkThreshold(0.4) \

.setTextThreshold(0.2)

ocr = ImageToTextV3() \

.setInputCols(["image_raw", "text_regions"]) \

.setOutputCol("text")

dicom_deidentifier = DicomDeidentifier() \

.setInputCols(["positions","metadata"]) \

.setKeepInput(True) \

.setOutputCol("entity") \

.setBlackList(["acc"])

pipeline = PipelineModel(stages=[

dicom_to_meta,

dicom_splitter,

dicom_to_image,

text_detector,

ocr,

dicom_deidentifier

])

result = pipeline.transform(df).cache()

import org.apache.spark.ml.Pipeline

import com.johnsnowlabs.ocr.transformers.DicomToMetadata

import com.johnsnowlabs.ocr.transformers.DicomSplitter

import com.johnsnowlabs.ocr.transformers.DicomToImageV3

import com.johnsnowlabs.ocr.transformers.ImageTextDetector

import com.johnsnowlabs.ocr.transformers.ImageToTextV3

import com.johnsnowlabs.ocr.transformers.DicomDeidentifier

import com.johnsnowlabs.ocr.enums.OcrOutputFormat

import com.johnsnowlabs.ocr.enums.SplittingStrategy

val df = spark.read.format("binaryFile").load(path_to_dicom_file).drop("content")

val dicom_to_meta = new DicomToMetadata()

.setInputCol("path")

.setOutputCol("metadata")

.setKeepInput(true)

val dicom_splitter = new DicomSplitter()

.setInputCol("path")

.setOutputCol("frames")

.setKeepInput(true)

.setSplitNumBatch(2)

.setPartitionNum(10)

.setSplittingStategy(SplittingStrategy.FIXED_SIZE_OF_PARTITION)

val dicom_to_image = new DicomToImageV3()

.setInputCols(Array("path", "frames"))

.setOutputCol("image_raw")

.setKeepInput(true)

val text_detector = ImageTextDetector.pretrained("image_text_detector_opt", "en", "clinical/ocr")

.setInputCol("image_raw")

.setOutputCol("text_regions")

.setSizeThreshold(10)

.setScoreThreshold(0.9)

.setLinkThreshold(0.4)

.setTextThreshold(0.2)

val ocr = new ImageToTextV3()

.setInputCols(Array("image_raw", "text_regions"))

.setOutputCol("text")

val dicom_deidentifier = new DicomDeidentifier()

.setInputCols(Array("positions","metadata"))

.setKeepInput(true)

.setOutputCol("entity")

.setBlackList(Array("acc"))

val pipeline = new Pipeline().setStages(Array(

dicom_to_meta,

dicom_splitter,

dicom_to_image,

text_detector,

ocr,

dicom_deidentifier

))

val result = pipeline.fit(df).transform(df).cache()

DicomToMetadata

Extract metadata from Dicom files.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | content | Specifies the path or buffer for the DICOM file. |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| KeepInput | boolean | False | Indicates if the DICOM input columns should remain in the final result DataFrame. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | - | Specify the column name to contain the dicom metadata as output |

Example:

from sparkocr.transformers import DicomToMetadata

dicom_to_meta = DicomToMetadata() \

.setInputCol("path") \

.setOutputCol("metadata") \

.setKeepInput(True)

df_dicom = spark.read.format("binaryFile").load(dicom_file_path)

result = dicom_to_meta.transform(df_dicom).cache()

dicom_metadata = result.select("metadata").collect()[0].asDict()["metadata"]

import com.johnsnowlabs.ocr.transformers.DicomToMetadata

val dicom_to_meta = new DicomToMetadata()

.setInputCol("path")

.setOutputCol("metadata")

.setKeepInput(true)

val df_dicom = spark.read.format("binaryFile").load(dicom_file_path)

val result = dicom_to_meta.transform(df_dicom).cache()

val dicom_metadata = result.select("metadata").head().get(0)

DicomToPDF

Convert Dicom Files to PDF files.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCols | array[string] | - | Specifies the input path or buffer for the DICOM file. |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| KeepInput | boolean | False | Determines if the original input should be retained in the resulting DataFrame. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | - | Specifies the column name containing the final PDF file resulting from the conversion. |

Example:

from sparkocr.transformers import DicomToPdf, PdfToImage

from pyspark.ml import PipelineModel

df_dicom = spark.read.format("binaryFile").load(dicom_file_path)

dicom_to_pdf = DicomToPdf() \

.setInputCols(["path"]) \

.setOutputCol("dicom_pdf") \

.setKeepInput(True)

pdf_to_image = PdfToImage() \

.setInputCol("dicom_pdf") \

.setOutputCol("image")

pipeline = PipelineModel(stages=[

dicom_to_pdf,

pdf_to_image

])

result = pipeline.transform(df_dicom).cache()

import com.johnsnowlabs.ocr.transformers.DicomToPdf

import com.johnsnowlabs.ocr.transformers.PdfToImage

import org.apache.spark.ml.Pipeline

val df_dicom = spark.read.format("binaryFile").load(dicom_file_path)

val dicom_to_pdf = new DicomToPdf()

.setInputCols(Array("path"))

.setOutputCol("dicom_pdf")

.setKeepInput(true)

val pdf_to_image = new PdfToImage()

.setInputCol("dicom_pdf")

.setOutputCol("image")

// PipelineModel has no public constructor in Scala, so build a Pipeline and fit it

val pipeline = new Pipeline().setStages(Array(

dicom_to_pdf,

pdf_to_image

))

val result = pipeline.fit(df_dicom).transform(df_dicom).cache()

DicomUpdatePDF

Converts a PDF file back into a DICOM file as the last step of the de-identification process.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | content | Specifies the column containing the DICOM file path or buffer input |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| KeepInput | boolean | False | Indicates whether input columns should be retained in the resulting DataFrame |

| InputPdfCol | string | - | Specifies the column containing the pdf object in the resulting DataFrame. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | - | Specifies the column containing the dicom in the resulting DataFrame. |

Example:

from sparkocr.transformers import DicomUpdatePdf

dicom_update_pdf = DicomUpdatePdf() \

.setInputCol("path") \

.setInputPdfCol("pdf") \

.setOutputCol("dicom") \

.setKeepInput(True)

result = dicom_update_pdf.transform(df).cache()

result.write.format("binaryFormat") \

.option("type", "dicom") \

.option("field", "dicom") \

.option("extension", "dcm") \

.option("prefix", "de-id-ybr") \

.mode("overwrite") \

.save("/content/dicom")

import com.johnsnowlabs.ocr.transformers.DicomUpdatePdf

val dicom_update_pdf = new DicomUpdatePdf()

.setInputCol("path")

.setInputPdfCol("pdf")

.setOutputCol("dicom")

.setKeepInput(true)

val result = dicom_update_pdf.transform(df).cache()

result.write.format("binaryFormat")

.option("type", "dicom")

.option("field", "dicom")

.option("extension", "dcm")

.option("prefix", "de-id-ybr")

.mode("overwrite")

.save("/content/dicom")

Image pre-processing

Next section describes the transformers for image pre-processing: scaling, binarization, skew correction, etc.

BinaryToImage

BinaryToImage transforms image (loaded as binary file) to image struct.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | content | binary representation of the image |

| originCol | string | path | path to the original file |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | image | extracted image struct (Image schema) |

Example:

from sparkocr.transformers import *

imagePath = "path to image"

# Read image file as binary file

df = spark.read.format("binaryFile").load(imagePath)

binaryToImage = BinaryToImage() \

.setInputCol("content") \

.setOutputCol("image")

data = binaryToImage.transform(df)

data.select("image").show()

import com.johnsnowlabs.ocr.transformers.BinaryToImage

val imagePath = "path to image"

// Read image file as binary file

val df = spark.read.format("binaryFile").load(imagePath)

val binaryToImage = new BinaryToImage()

.setInputCol("content")

.setOutputCol("image")

val data = binaryToImage.transform(df)

data.select("image").show()

GPUImageTransformer

GPUImageTransformer runs image pre-processing operations on the GPU.

It supports the following operations:

- Scaling

- Otsu thresholding

- Huang thresholding

- Erosion

- Dilation

Several operations can be chained in a single GPUImageTransformer. To add an operation, call one of the following methods with its params:

| Method name | Params | Description |

|---|---|---|

| addScalingTransform | factor | Scale image by scaling factor. |

| addOtsuTransform | | The automatic thresholder utilizes the Otsu threshold method. |

| addHuangTransform | | The automatic thresholder utilizes the Huang threshold method. |

| addDilateTransform | width, height | Computes the local maximum of a pixel's rectangular neighborhood. The rectangle's size is specified by its half-width and half-height. |

| addErodeTransform | width, height | Computes the local minimum of a pixel's rectangular neighborhood. The rectangle's size is specified by its half-width and half-height. |
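
To make the half-width and half-height convention concrete, dilation and erosion can be sketched on a small grayscale image stored as nested lists. This is a CPU-only illustration under the assumption that the neighborhood is clipped at the image borders; `dilate` and `erode` here are hypothetical helpers, not the GPU implementation.

```python
from typing import Callable, List

Image = List[List[int]]


def _neighborhood_op(img: Image, half_w: int, half_h: int, op: Callable) -> Image:
    rows, cols = len(img), len(img[0])
    out = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            # Rectangle of (2*half_w + 1) x (2*half_h + 1) pixels, clipped at the borders
            window = [
                img[j][i]
                for j in range(max(0, y - half_h), min(rows, y + half_h + 1))
                for i in range(max(0, x - half_w), min(cols, x + half_w + 1))
            ]
            out_row.append(op(window))
        out.append(out_row)
    return out


def dilate(img: Image, half_w: int, half_h: int) -> Image:
    """Local maximum over the rectangular neighborhood."""
    return _neighborhood_op(img, half_w, half_h, max)


def erode(img: Image, half_w: int, half_h: int) -> Image:
    """Local minimum over the rectangular neighborhood."""
    return _neighborhood_op(img, half_w, half_h, min)


img = [
    [0, 0, 0],
    [0, 255, 0],
    [0, 0, 0],
]
print(dilate(img, 1, 0))  # the bright pixel spreads horizontally only
print(erode(img, 1, 1))   # the isolated bright pixel disappears
```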

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| imageType | ImageType | ImageType.TYPE_BYTE_BINARY | Type of the output image |

| gpuName | string | ”” | GPU device name. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | transformed_image | image struct (Image schema) |

Example:

from pyspark.ml import PipelineModel

from sparkocr.transformers import *

from sparkocr.enums import ImageType

from sparkocr.utils import display_images

imagePath = "path to image"

# Read image file as binary file

df = spark.read \

.format("binaryFile") \

.load(imagePath)

binary_to_image = BinaryToImage() \

.setInputCol("content") \

.setOutputCol("image")

transformer = GPUImageTransformer() \

.setInputCol("image") \

.setOutputCol("transformed_image") \

.addHuangTransform() \

.addScalingTransform(3) \

.addDilateTransform(2, 2) \

.setImageType(ImageType.TYPE_BYTE_BINARY)

pipeline = PipelineModel(stages=[

binary_to_image,

transformer

])

result = pipeline.transform(df)

display_images(result, "transformed_image")

import com.johnsnowlabs.ocr.transformers.GPUImageTransformer

import com.johnsnowlabs.ocr.OcrContext.implicits._

val imagePath = "path to image"

// Read image file as binary file

val df = spark.read

.format("binaryFile")

.load(imagePath)

.asImage("image")

val transformer = new GPUImageTransformer()

.setInputCol("image")

.setOutputCol("transformed_image")

.addHuangTransform()

.addScalingTransform(3)

.addDilateTransform(2, 2)

.setImageType(ImageType.TYPE_BYTE_BINARY)

val data = transformer.transform(df)

data.storeImage("transformed_image")

ImageBinarizer

ImageBinarizer transforms image to binary color schema, based on threshold.
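
Conceptually, global thresholding compares every pixel with one fixed value. The sketch below shows the idea on a grayscale image stored as nested lists; it assumes that pixels strictly above the threshold become white (255) and all others black (0), which may differ from the transformer's exact convention. `binarize` is a hypothetical helper.

```python
from typing import List


def binarize(img: List[List[int]], threshold: int) -> List[List[int]]:
    """Map each grayscale pixel to 255 if it exceeds the threshold, else to 0.

    Assumption: the comparison is strict (pixel > threshold).
    """
    return [[255 if pixel > threshold else 0 for pixel in row] for row in img]


print(binarize([[90, 100, 101, 200]], threshold=100))
```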

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| threshold | int | 170 | Threshold value used for binarization |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | binarized_image | image struct (Image schema) |

Example:

from sparkocr.transformers import *

imagePath = "path to image"

# Read image file as binary file

df = spark.read \

.format("binaryFile") \

.load(imagePath) \

.asImage("image")

binarizer = ImageBinarizer() \

.setInputCol("image") \

.setOutputCol("binary_image") \

.setThreshold(100)

data = binarizer.transform(df)

data.show()

import com.johnsnowlabs.ocr.transformers.ImageBinarizer

import com.johnsnowlabs.ocr.OcrContext.implicits._

val imagePath = "path to image"

// Read image file as binary file

val df = spark.read

.format("binaryFile")

.load(imagePath)

.asImage("image")

val binarizer = new ImageBinarizer()

.setInputCol("image")

.setOutputCol("binary_image")

.setThreshold(100)

val data = binarizer.transform(df)

data.storeImage("binary_image")

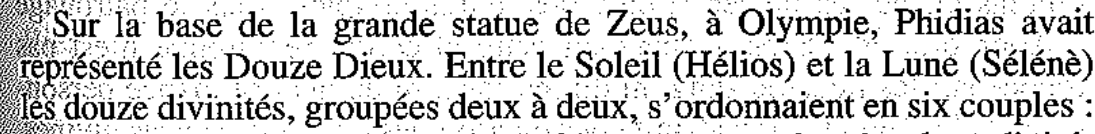

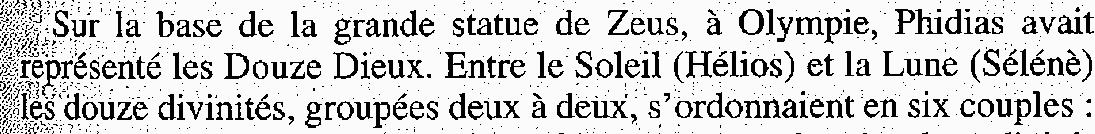

Original image:

Binarized image with 100 threshold:

ImageAdaptiveBinarizer

Supported Methods:

- OTSU. Returns a single intensity threshold that separates pixels into two classes, foreground and background.

- Gaussian local thresholding. Thresholds the image using a locally adaptive threshold that is computed using a local square region centered on each pixel. The threshold is equal to the Gaussian-weighted sum of the surrounding pixels times the scale.

- Sauvola. A local thresholding technique that is useful for images where the background is not uniform.
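
For readers unfamiliar with the OTSU method listed above, here is a minimal textbook sketch over an 8-bit histogram: it picks the threshold that maximizes the between-class variance of the two pixel classes. It is an illustration only and not the library's implementation; `otsu_threshold` is a hypothetical helper.

```python
from typing import Sequence


def otsu_threshold(pixels: Sequence[int]) -> int:
    """Return the threshold t that best separates values <= t from values > t."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(value * count for value, count in enumerate(hist))

    best_t, best_score = 0, -1.0
    w0 = 0    # number of pixels with value <= t (background class)
    sum0 = 0  # sum of the values in that class
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so t is not a valid split
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        # Proportional to the between-class variance
        score = w0 * w1 * (mean0 - mean1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


# Two well-separated clusters: dark pixels at 10 and bright pixels at 200
pixels = [10] * 50 + [200] * 50
t = otsu_threshold(pixels)
print(t, [p > t for p in (10, 200)])
```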

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| width | float | 90 | Width of square region. |

| method | TresholdingMethod | TresholdingMethod.GAUSSIAN | Method used to determine adaptive threshold. |

| scale | float | 1.1f | Scale factor used to adjust threshold. |

| imageType | ImageType | ImageType.TYPE_BYTE_BINARY | Type of the output image |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | binarized_image | image struct (Image schema) |

Example:

from pyspark.ml import PipelineModel

from sparkocr.transformers import *

from sparkocr.utils import display_image

imagePath = "path to image"

# Read image file as binary file

df = spark.read \

.format("binaryFile") \

.load(imagePath)

binary_to_image = BinaryToImage() \

.setInputCol("content") \

.setOutputCol("image")

adaptive_thresholding = ImageAdaptiveBinarizer() \

.setInputCol("image") \

.setOutputCol("binarized_image") \

.setWidth(100) \

.setScale(1.1)

pipeline = PipelineModel(stages=[

binary_to_image,

adaptive_thresholding

])

result = pipeline.transform(df)

for r in result.select("image", "binarized_image").collect():

    display_image(r.image)

    display_image(r.binarized_image)

import com.johnsnowlabs.ocr.transformers._

import com.johnsnowlabs.ocr.OcrContext.implicits._

val imagePath = "path to image"

// Read image file as binary file

val df = spark.read

.format("binaryFile")

.load(imagePath)

.asImage("image")

val binarizer = new ImageAdaptiveBinarizer()

.setInputCol("image")

.setOutputCol("binary_image")

.setWidth(100)

.setScale(1.1)

val data = binarizer.transform(df)

data.storeImage("binary_image")

ImageAdaptiveThresholding

Compute a threshold mask image based on local pixel neighborhood and apply it to image.

Also known as adaptive or dynamic thresholding. The threshold value is the weighted mean for the local neighborhood of a pixel subtracted by a constant.

Supported methods:

- GAUSSIAN

- MEAN

- MEDIAN

- WOLF

- SINGH
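
The rule "local mean minus a constant" can be sketched for the simplest case, the MEAN method with uniform weights. The code below is a plain-Python illustration on nested lists; it assumes the neighborhood is clipped at the image borders (the transformer's `mode` and `cval` parameters handle borders differently) and that pixels strictly above the local threshold become white. `adaptive_mean_threshold` is a hypothetical helper.

```python
from typing import List


def adaptive_mean_threshold(img: List[List[int]], block_size: int, offset: int = 0) -> List[List[int]]:
    """Binarize each pixel against (mean of its block_size x block_size neighborhood) - offset."""
    if block_size % 2 == 0:
        raise ValueError("block_size must be odd")
    half = block_size // 2
    rows, cols = len(img), len(img[0])
    out = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            # Neighborhood clipped at the borders (assumption made for this sketch)
            window = [
                img[j][i]
                for j in range(max(0, y - half), min(rows, y + half + 1))
                for i in range(max(0, x - half), min(cols, x + half + 1))
            ]
            local_threshold = sum(window) / len(window) - offset
            out_row.append(255 if img[y][x] > local_threshold else 0)
        out.append(out_row)
    return out


img = [
    [10, 10, 10],
    [10, 100, 10],
    [10, 10, 10],
]
print(adaptive_mean_threshold(img, block_size=3, offset=0))
```

A larger offset lowers every local threshold, so more pixels end up white.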

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| blockSize | int | 170 | Odd size of pixel neighborhood which is used to calculate the threshold value (e.g. 3, 5, 7, …, 21, …). |

| method | AdaptiveThresholdingMethod | AdaptiveThresholdingMethod.GAUSSIAN | Method used to determine adaptive threshold for local neighbourhood in weighted mean image. |

| offset | int | 0 | Constant subtracted from the weighted mean of the neighborhood to calculate the local threshold value. |

| mode | string | | Determines how the array borders are handled; cval is the fill value when mode is ‘constant’. |

| cval | int | | Value to fill past edges of input if mode is ‘constant’. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | binarized_image | image struct (Image schema) |

Example:

from pyspark.ml import PipelineModel

from sparkocr.transformers import *

from sparkocr.utils import display_image

imagePath = "path to image"

# Read image file as binary file

df = spark.read \

.format("binaryFile") \

.load(imagePath)

binary_to_image = BinaryToImage() \

.setInputCol("content") \

.setOutputCol("image")

adaptive_thresholding = ImageAdaptiveThresholding() \

.setInputCol("image") \

.setOutputCol("binarized_image") \

.setBlockSize(21) \

.setOffset(73)

pipeline = PipelineModel(stages=[

binary_to_image,

adaptive_thresholding

])

result = pipeline.transform(df)

for r in result.select("image", "binarized_image").collect():

    display_image(r.image)

    display_image(r.binarized_image)

// Implemented only for Python

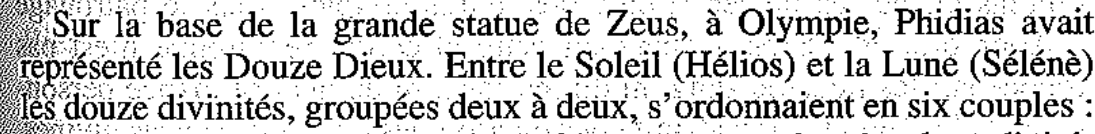

Original image:

Binarized image:

ImageScaler

ImageScaler scales an image by the provided scale factor or to the requested output size.

When a fixed output size is set, it can keep the original aspect ratio by padding the image.
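
The arithmetic behind ratio-preserving resizing can be sketched as follows: scale by the smaller of the two width and height ratios, then pad the remaining space. `fit_with_padding` is a hypothetical helper that only returns the total padding per axis; where the transformer places the padding is not specified here.

```python
from typing import Tuple


def fit_with_padding(width: int, height: int, out_width: int, out_height: int) -> Tuple[int, int, int, int]:
    """Return (scaled_width, scaled_height, pad_x, pad_y) for a fixed output size."""
    # The smaller ratio guarantees the scaled image fits inside the output box
    scale = min(out_width / width, out_height / height)
    scaled_w = round(width * scale)
    scaled_h = round(height * scale)
    return scaled_w, scaled_h, out_width - scaled_w, out_height - scaled_h


# A 200x100 image placed into a 100x100 output keeps its 2:1 ratio
print(fit_with_padding(200, 100, 100, 100))
```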

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| scaleFactor | double | 1.0 | scale factor |

| keepRatio | boolean | false | Keep original ratio of image |

| width | int | 0 | Output width of image |

| height | int | 0 | Output height of image |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | scaled_image | scaled image struct (Image schema) |

Example:

from sparkocr.transformers import *

imagePath = "path to image"

# Read image file as binary file

df = spark.read \

.format("binaryFile") \

.load(imagePath) \

.asImage("image")

transformer = ImageScaler() \

.setInputCol("image") \

.setOutputCol("scaled_image") \

.setScaleFactor(0.5)

data = transformer.transform(df)

data.show()

import com.johnsnowlabs.ocr.transformers.ImageScaler

import com.johnsnowlabs.ocr.OcrContext.implicits._

val imagePath = "path to image"

// Read image file as binary file

val df = spark.read

.format("binaryFile")

.load(imagePath)

.asImage("image")

val transformer = new ImageScaler()

.setInputCol("image")

.setOutputCol("scaled_image")

.setScaleFactor(0.5)

val data = transformer.transform(df)

data.storeImage("scaled_image")

ImageAdaptiveScaler

ImageAdaptiveScaler detects the font size and scales the image so that the text has the desired font size.
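
The scaling step reduces to a simple ratio once a font size has been detected. The sketch below assumes the detected size is already known in pixels (font detection itself is not shown); `adaptive_scale_factor` is a hypothetical helper, not a library function.

```python
def adaptive_scale_factor(detected_font_size: float, desired_size: float = 34) -> float:
    """Scale factor that brings the detected font size to the desired size."""
    if detected_font_size <= 0:
        raise ValueError("detected_font_size must be positive")
    return desired_size / detected_font_size


print(adaptive_scale_factor(17))  # small text: the image is enlarged
print(adaptive_scale_factor(68))  # large text: the image is shrunk
```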

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| desiredSize | int | 34 | desired size of font in pixels |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | scaled_image | scaled image struct (Image schema) |

Example:

from sparkocr.transformers import *

imagePath = "path to image"

# Read image file as binary file

df = spark.read \

.format("binaryFile") \

.load(imagePath) \

.asImage("image")

transformer = ImageAdaptiveScaler() \

.setInputCol("image") \

.setOutputCol("scaled_image") \

.setDesiredSize(34)

data = transformer.transform(df)

data.show()

import com.johnsnowlabs.ocr.transformers.ImageAdaptiveScaler

import com.johnsnowlabs.ocr.OcrContext.implicits._

val imagePath = "path to image"

// Read image file as binary file

val df = spark.read

.format("binaryFile")

.load(imagePath)

.asImage("image")

val transformer = new ImageAdaptiveScaler()

.setInputCol("image")

.setOutputCol("scaled_image")

.setDesiredSize(34)

val data = transformer.transform(df)

data.storeImage("scaled_image")

ImageSkewCorrector

ImageSkewCorrector detects skew of the image and rotates it.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| rotationAngle | double | 0.0 | rotation angle |

| automaticSkewCorrection | boolean | true | enables/disables adaptive skew correction |

| halfAngle | double | 5.0 | half the angle (in degrees) that will be considered for correction |

| resolution | double | 1.0 | The step size (in degrees) that will be used for generating correction angle candidates |
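
Taken together, halfAngle and resolution define a grid of candidate correction angles. The sketch below enumerates that grid under the assumption that it is symmetric around zero and includes both endpoints; how the transformer scores each candidate is not covered. `candidate_angles` is a hypothetical helper.

```python
from typing import List


def candidate_angles(half_angle: float = 5.0, resolution: float = 1.0) -> List[float]:
    """Angles from -half_angle to +half_angle (inclusive) in steps of `resolution`."""
    steps = int(round(2 * half_angle / resolution))
    return [-half_angle + i * resolution for i in range(steps + 1)]


angles = candidate_angles(5.0, 1.0)
print(len(angles), angles[0], angles[-1])
```

A smaller resolution gives finer candidates at the cost of evaluating more of them.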

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | corrected_image | corrected image struct (Image schema) |

Example:

from pyspark.ml import PipelineModel

from sparkocr.transformers import *

from sparkocr.utils import display_images

imagePath = "path to image"

# Read image file as binary file

df = spark.read \

.format("binaryFile") \

.load(imagePath)

binary_to_image = BinaryToImage() \

.setInputCol("content") \

.setOutputCol("image")

skew_corrector = ImageSkewCorrector() \

.setInputCol("image") \

.setOutputCol("corrected_image") \

.setAutomaticSkewCorrection(True)

# Define pipeline

pipeline = PipelineModel(stages=[

binary_to_image,

skew_corrector

])

data = pipeline.transform(df)

display_images(data, "corrected_image")

import com.johnsnowlabs.ocr.transformers.ImageSkewCorrector

import com.johnsnowlabs.ocr.OcrContext.implicits._

val imagePath = "path to image"

// Read image file as binary file

val df = spark.read

.format("binaryFile")

.load(imagePath)

.asImage("image")

val transformer = new ImageSkewCorrector()

.setInputCol("image")

.setOutputCol("corrected_image")

.setAutomaticSkewCorrection(true)

val data = transformer.transform(df)

data.storeImage("corrected_image")

Original image:

Corrected image:

ImageNoiseScorer

ImageNoiseScorer computes a noise score for each region.

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | image | image struct (Image schema) |

| inputRegionsCol | string | regions | regions |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| method | NoiseMethod string | NoiseMethod.RATIO | method used to compute the noise score |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | noisescores | noise score for each region |

Example:

from pyspark.ml import PipelineModel

from sparkocr.transformers import *

from sparkocr.enums import NoiseMethod

imagePath = "path to image"

# Read image file as binary file

df = spark.read \

.format("binaryFile") \

.load(imagePath) \

.asImage("image")

# Define transformer to detect regions

layoutAnalyzer = ImageLayoutAnalyzer() \

.setInputCol("image") \

.setOutputCol("regions")

# Define transformer to compute the noise level for each region

noisescorer = ImageNoiseScorer() \

.setInputCol("image") \

.setOutputCol("noiselevel") \

.setInputRegionsCol("regions") \

.setMethod(NoiseMethod.VARIANCE)

# Define pipeline

pipeline = PipelineModel(stages=[

layoutAnalyzer,

noisescorer

])

data = pipeline.transform(df)

data.select("path", "noiselevel").show()

import org.apache.spark.ml.Pipeline

import com.johnsnowlabs.ocr.transformers.{ImageNoiseScorer, ImageLayoutAnalyzer}

import com.johnsnowlabs.ocr.NoiseMethod

import com.johnsnowlabs.ocr.OcrContext.implicits._

val imagePath = "path to image"

// Read image file as binary file

val df = spark.read

.format("binaryFile")

.load(imagePath)

.asImage("image")

// Define transformer to detect regions

val layoutAnalyzer = new ImageLayoutAnalyzer()

.setInputCol("image")

.setOutputCol("regions")

// Define transformer to compute the noise level for each region

val noisescorer = new ImageNoiseScorer()

.setInputCol("image")

.setOutputCol("noiselevel")

.setInputRegionsCol("regions")

.setMethod(NoiseMethod.VARIANCE)

// Define pipeline

val pipeline = new Pipeline()

pipeline.setStages(Array(

layoutAnalyzer,

noisescorer

))

val modelPipeline = pipeline.fit(spark.emptyDataFrame)

val data = modelPipeline.transform(df)

data.select("path", "noiselevel").show()

Output:

+------------------+-----------------------------------------------------------------------------+

|path |noiselevel |

+------------------+-----------------------------------------------------------------------------+

|file:./noisy.png |[32.01805641767766, 32.312916551193354, 29.99257352247787, 30.62470388308217]|

+------------------+-----------------------------------------------------------------------------+
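
A per-region score like this is typically used to decide which regions need extra cleanup. The snippet below is a minimal sketch in plain Python that flags the regions from the sample output above whose score exceeds a threshold. The threshold of 31.0 and the helper name `noisy_regions` are illustrative assumptions, not values or functions provided by the library.

```python
def noisy_regions(scores, threshold):
    """Return the indexes of regions whose noise score exceeds `threshold`."""
    return [i for i, score in enumerate(scores) if score > threshold]


# Scores copied from the sample output for noisy.png.
scores = [32.01805641767766, 32.312916551193354, 29.99257352247787, 30.62470388308217]

print(noisy_regions(scores, 31.0))  # [0, 1]
```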

ImageRemoveObjects

Python only

ImageRemoveObjects removes background objects from an image.

It supports removing:

- objects smaller than font elements of size minSizeFont

- objects smaller than minSizeObject

- holes smaller than minSizeHole

- objects larger than maxSizeObject

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | None | image struct (Image schema) |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| minSizeFont | int | 10 | Minimum font size in pt. |

| minSizeObject | int | None | Minimum size of objects that will be kept in the image [*]. |

| connectivityObject | int | 0 | The connectivity defining the neighborhood of a pixel. |

| minSizeHole | int | None | Minimum size of holes that will be kept in the image [*]. |

| connectivityHole | int | 0 | The connectivity defining the neighborhood of a pixel. |

| maxSizeObject | int | None | Maximum size of objects that will be kept in the image [*]. |

| connectivityMaxObject | int | 0 | The connectivity defining the neighborhood of a pixel. |

[*]: A value of None disables the corresponding removal.
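
To make the role of a minimum object size and a connectivity setting concrete, here is a minimal pure-Python sketch that drops connected components smaller than a given size from a binary image. It is an illustration of the general technique, not the transformer's implementation. The function name `remove_small_objects`, the list-of-lists image format, and the mapping of connectivity values (1 for 4-neighbourhood, 2 for 8-neighbourhood) are assumptions made for this example.

```python
from collections import deque


def remove_small_objects(image, min_size, connectivity=1):
    """Return a copy of a binary image without components smaller than min_size.

    image: list of rows holding 0/1 values (1 = foreground).
    connectivity: 1 -> 4-neighbourhood, 2 -> 8-neighbourhood (assumed meaning).
    """
    if connectivity == 1:
        offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    else:
        offsets = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                   if (dr, dc) != (0, 0)]
    rows, cols = len(image), len(image[0])
    result = [row[:] for row in image]
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if image[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first search collects one connected component.
            component, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                cr, cc = queue.popleft()
                component.append((cr, cc))
                for dr, dc in offsets:
                    nr, nc = cr + dr, cc + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and image[nr][nc] == 1 and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            # Erase components that are below the minimum size.
            if len(component) < min_size:
                for pr, pc in component:
                    result[pr][pc] = 0
    return result


image = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
]
cleaned = remove_small_objects(image, min_size=2)
for row in cleaned:
    print(row)
```

In this example the 2x2 block (4 pixels) survives, while the two isolated pixels are removed because each forms a component of size 1.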

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| outputCol | string | None | image struct with objects removed (Image schema) |

Example:

from pyspark.ml import PipelineModel

from sparkocr.transformers import *

imagePath = "path to image"

# Read image file as binary file

df = spark.read \

.format("binaryFile") \

.load(imagePath)

binary_to_image = BinaryToImage() \

.setInputCol("content") \

.setOutputCol("image")

remove_objects = ImageRemoveObjects() \

.setInputCol("image") \

.setOutputCol("corrected_image") \

.setMinSizeObject(20)

pipeline = PipelineModel(stages=[

binary_to_image,

remove_objects

])

data = pipeline.transform(df)

// Implemented only for Python

ImageMorphologyOperation

Python only

ImageMorphologyOperation is a transformer for applying morphological operations to an image.

It supports the following operations:

- Erosion

- Dilation

- Opening

- Closing
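
The four operations are related: opening is an erosion followed by a dilation, and closing is a dilation followed by an erosion. The sketch below shows this on a small binary image in pure Python. It is a simplified illustration, not the transformer's code: it assumes a square kernel of side 2 * size + 1 rather than the default disk shape, treats pixels outside the image as background, and all function names are hypothetical.

```python
def _neighbourhood(image, r, c, size):
    """Yield kernel values around (r, c); out-of-bounds pixels count as 0."""
    rows, cols = len(image), len(image[0])
    for dr in range(-size, size + 1):
        for dc in range(-size, size + 1):
            nr, nc = r + dr, c + dc
            yield image[nr][nc] if 0 <= nr < rows and 0 <= nc < cols else 0


def erode(image, size=1):
    """Keep a pixel only if every pixel under the kernel is foreground."""
    return [[int(all(v == 1 for v in _neighbourhood(image, r, c, size)))
             for c in range(len(image[0]))] for r in range(len(image))]


def dilate(image, size=1):
    """Set a pixel if any pixel under the kernel is foreground."""
    return [[int(any(v == 1 for v in _neighbourhood(image, r, c, size)))
             for c in range(len(image[0]))] for r in range(len(image))]


def opening(image, size=1):
    """Erosion then dilation: removes small foreground specks."""
    return dilate(erode(image, size), size)


def closing(image, size=1):
    """Dilation then erosion: fills small holes."""
    return erode(dilate(image, size), size)


speck = [
    [1, 1, 1, 0, 0],
    [1, 1, 1, 0, 0],
    [1, 1, 1, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
hole = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
opened = opening(speck)  # isolated pixel at row 2, column 4 disappears
closed = closing(hole)   # hole at row 2, column 2 is filled
for row in opened + closed:
    print(row)
```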

Input Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|

| inputCol | string | None | image struct (Image schema) |

Parameters

| Param name | Type | Default | Description |

|---|---|---|---|

| operation | MorphologyOperationType | MorphologyOperationType.OPENING | Operation type |

| kernelShape | KernelShape | KernelShape.DISK | Kernel shape. |

| kernelSize | int | 1 | Kernel size in pixels. |

Output Columns

| Param name | Type | Default | Column Data Description |

|---|---|---|---|