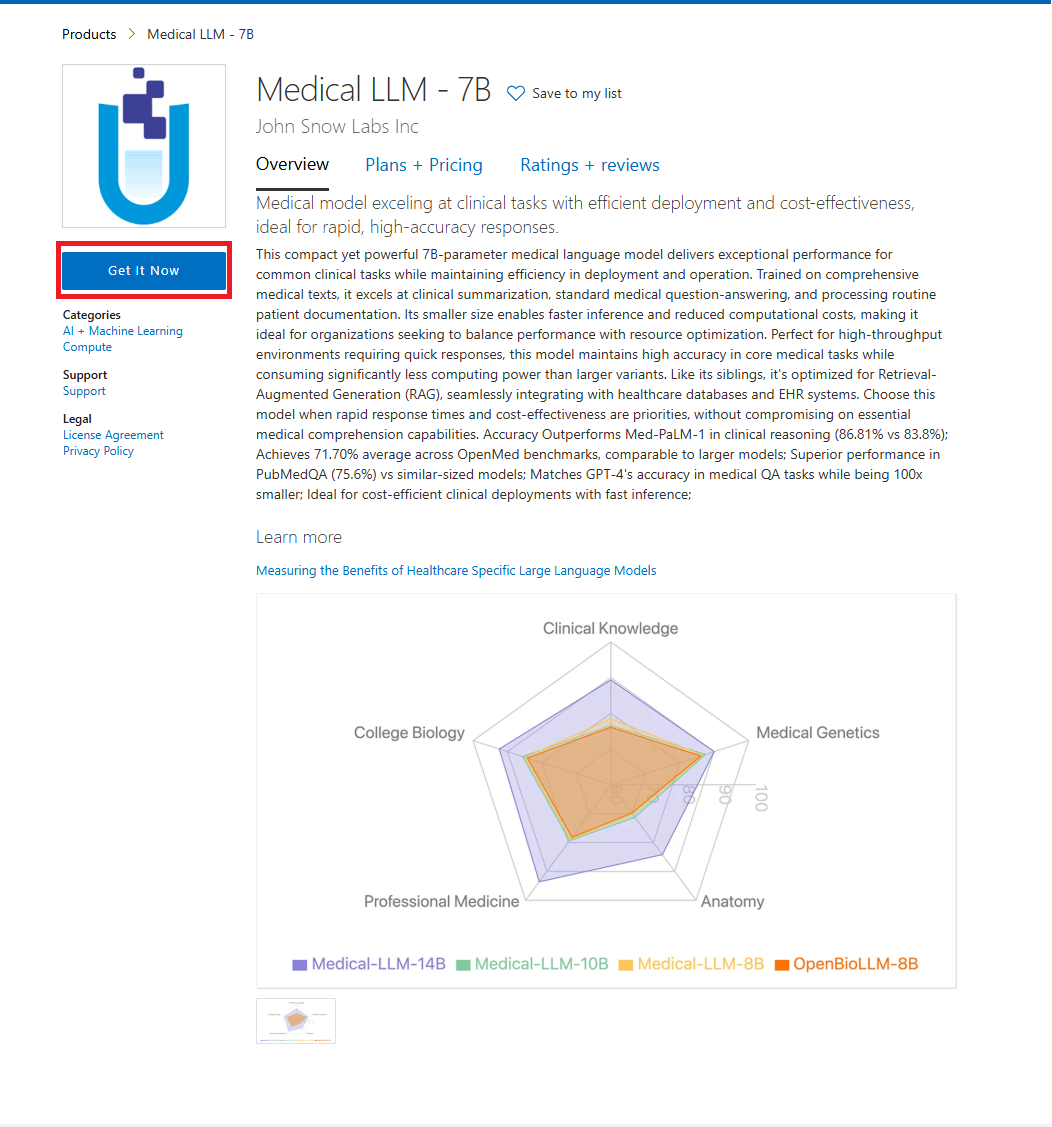

The list below shows John Snow Labs’ Medical LLM models available on Azure Marketplace.

All of these LLMs on Azure expose an OpenAI-compatible API.

Deployment Instructions

-

Subscribe to the Product from the models listing page using the

Get It Nowbutton.

-

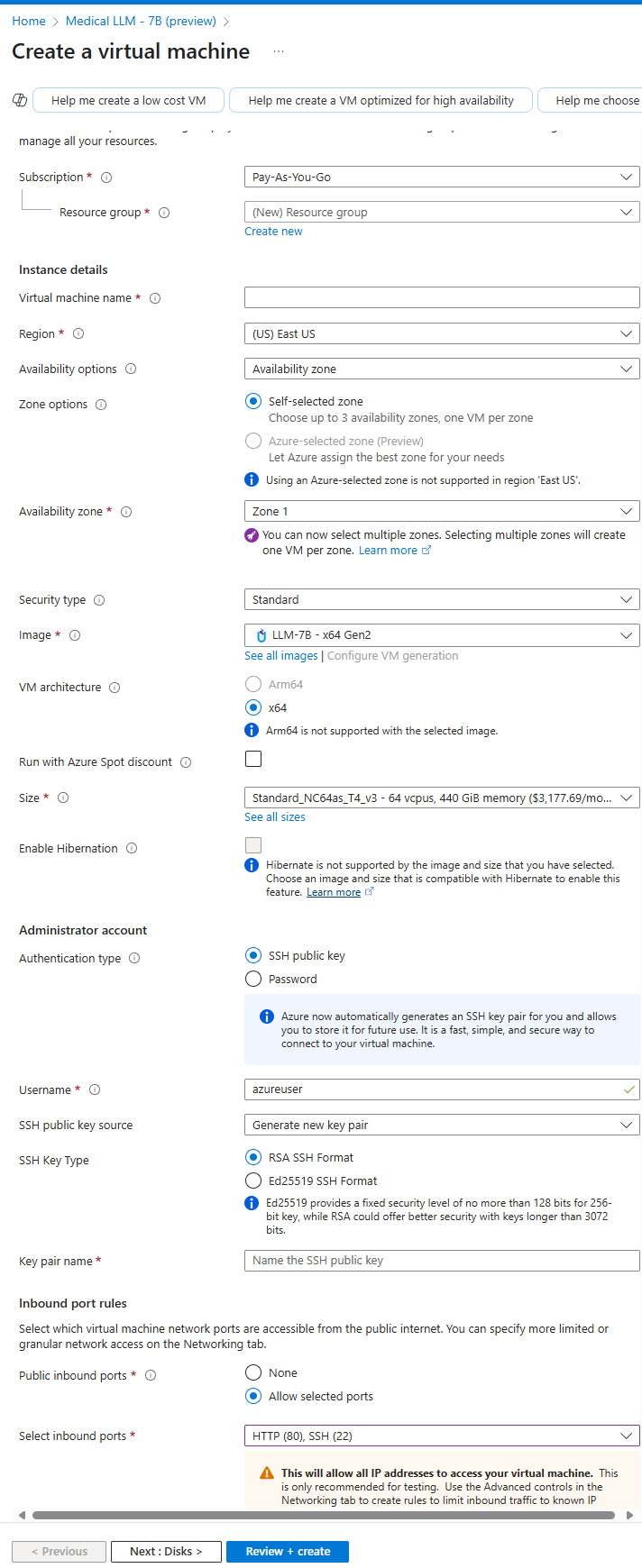

Create a virtual machine of the product. Make sure port 80 is open for inbound requests.

Optional, open port 3000 for Open Web UI interface.

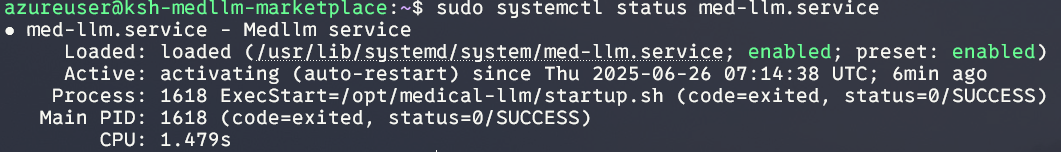

- Wait for the services to become active. This might take a few minutes on the initial boot.

To check the status, log in to the instance and run this command:

sudo systemctl status med-llm.service

- Once all services report an active status, access the model API docs at http://INSTANCE_IP/docs

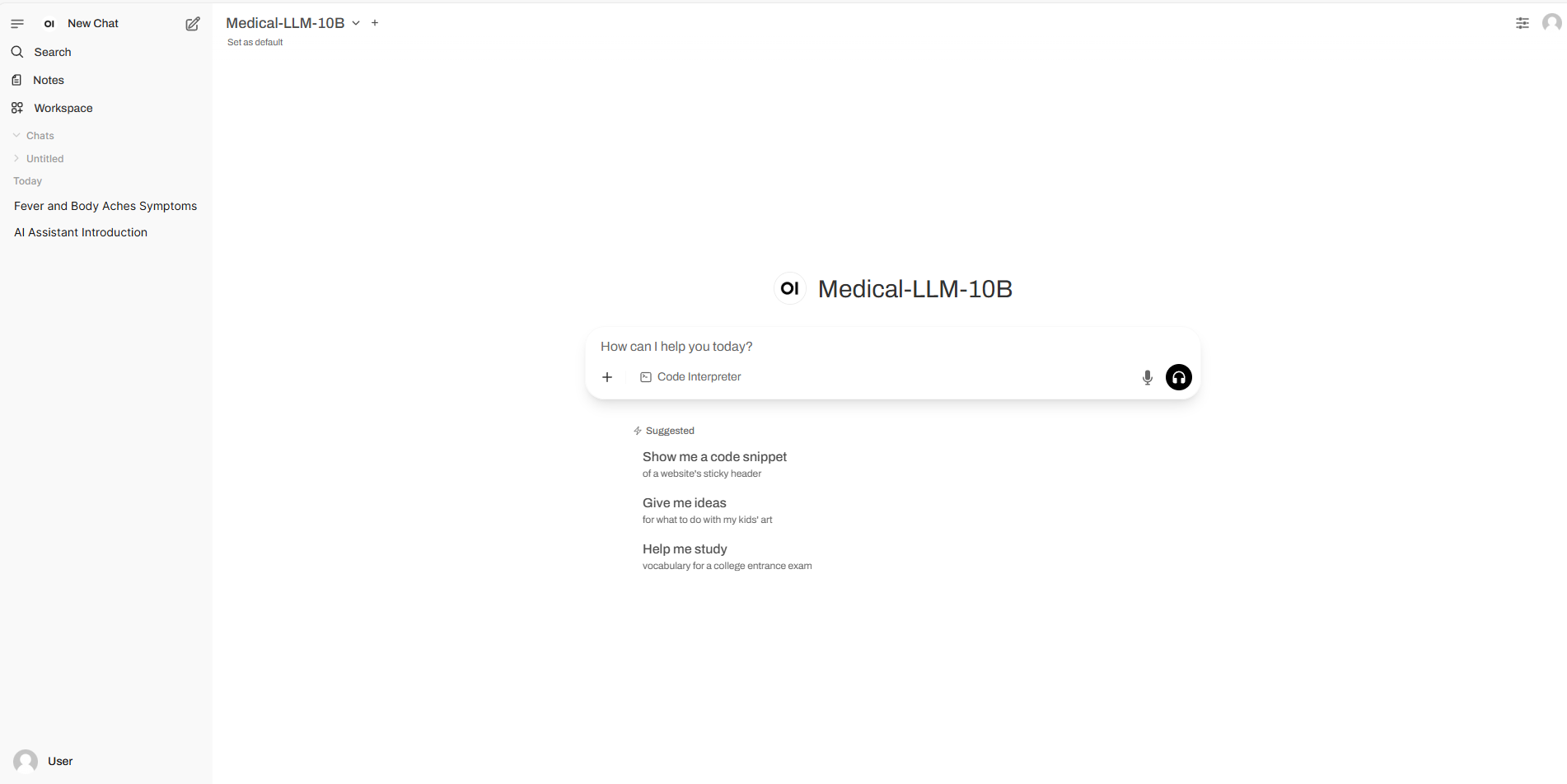

- Open WebUI is hosted on port 3000. You can also interact with the model from there.
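The wait-and-check step above can also be scripted from a client machine. The following is a minimal sketch, assuming the docs page at http://INSTANCE_IP/docs returns HTTP 200 once the service is up; the function names, timeout, and polling interval are illustrative and not part of the product.

```python
import time
import urllib.error
import urllib.request


def docs_url(instance_ip: str) -> str:
    """Build the API docs URL served on port 80 of the VM."""
    return f"http://{instance_ip}/docs"


def wait_until_ready(instance_ip: str,
                     timeout_s: float = 600.0,
                     interval_s: float = 10.0) -> bool:
    """Poll the docs page until it answers with HTTP 200 or the timeout expires.

    Assumption: a 200 response from /docs means the model service is ready.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(docs_url(instance_ip), timeout=5) as resp:
                if resp.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # service not reachable yet; retry after a short pause
        time.sleep(interval_s)
    return False


# Example (replace the placeholder with the VM's public IP):
# ready = wait_until_ready("INSTANCE_IP")
```

If the function returns False, logging in to the instance and checking `sudo systemctl status med-llm.service` as described above is the more direct diagnostic.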

Model Interactions

Once deployed, the container exposes a RESTful API for model interactions.

Chat Completions

Use this endpoint for multi-turn conversational interactions (e.g., clinical assistants).

- Endpoint: /v1/chat/completions

- Method: POST

- Example Request:

payload = {
    "model": "Medical-LLM-Small",
    "messages": [
        {"role": "system", "content": "You are a professional medical assistant"},
        {"role": "user", "content": "Explain symptoms of chronic fatigue syndrome"}
    ],
    "temperature": 0.7,
    "max_tokens": 1024
}
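To show how such a payload might be sent, here is a minimal sketch using only the Python standard library. It assumes the API is reachable on port 80 without an authentication header and that the response follows the OpenAI chat completion schema (`choices[0].message.content`); the helper names are hypothetical, so check http://INSTANCE_IP/docs for the actual schema.

```python
import json
import urllib.request


def build_chat_request(base_url: str, payload: dict) -> urllib.request.Request:
    """Wrap the payload in a JSON POST request to /v1/chat/completions."""
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_chat_text(response_json: dict) -> str:
    """Return the assistant reply (assumes the OpenAI response layout)."""
    return response_json["choices"][0]["message"]["content"]


def chat(base_url: str, payload: dict, timeout_s: float = 120.0) -> str:
    """Send the request and return the generated reply text."""
    request = build_chat_request(base_url, payload)
    with urllib.request.urlopen(request, timeout=timeout_s) as resp:
        return extract_chat_text(json.load(resp))


# Example (replace INSTANCE_IP with the VM's public IP):
# print(chat("http://INSTANCE_IP", payload))
```

For a multi-turn conversation, append each assistant reply and the next user message to `payload["messages"]` before calling `chat` again.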

Text Completions

Use this endpoint for single-turn prompts or generating long-form medical text.

- Endpoint: /v1/completions

- Method: POST

- Example Request:

payload = { "model": "Medical-LLM-Small", "prompt": "Provide a detailed explanation of rheumatoid arthritis treatment", "temperature": 0.7, "max_tokens": 4096 }
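A text completion request can be sent in the same way. The sketch below assumes no authentication header is required and that the response follows the OpenAI text completion schema (`choices[0].text`); the helper names are hypothetical and not part of the product.

```python
import json
import urllib.request


def build_completion_request(base_url: str, payload: dict) -> urllib.request.Request:
    """Wrap the payload in a JSON POST request to /v1/completions."""
    return urllib.request.Request(
        url=f"{base_url}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_completion_text(response_json: dict) -> str:
    """Return the generated text (assumes the OpenAI response layout)."""
    return response_json["choices"][0]["text"]


def complete(base_url: str, payload: dict, timeout_s: float = 300.0) -> str:
    """Send the request and return the generated text."""
    request = build_completion_request(base_url, payload)
    with urllib.request.urlopen(request, timeout=timeout_s) as resp:
        return extract_completion_text(json.load(resp))


# Example (replace INSTANCE_IP with the VM's public IP):
# print(complete("http://INSTANCE_IP", payload))
```

The longer default timeout is an illustrative choice: a request with `max_tokens` of 4096 may take noticeably longer to return than a short chat reply.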
