Description

This model is based on the Vision Transformer (ViT) architecture and is designed to classify DICOM images. It was trained in-house on a diverse mix of data, including DICOM images, COCO images, and in-house annotated documents, so that it can distinguish medical images from associated document notes and other content.
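As background, a standard ViT does not slide convolutions over the image. It cuts the input into fixed-size square patches and treats each patch as a token, usually with one extra learnable classification token. The sketch below only does that token-count arithmetic. The 224-pixel input size and 16-pixel patch size are assumptions taken from the common ViT-Base configuration, not values stated for `visualclf_vit_dicom`.

```python
def vit_sequence_length(image_size: int = 224, patch_size: int = 16, cls_token: bool = True) -> int:
    """Number of tokens a standard ViT feeds to its Transformer encoder.

    Assumes a square input image whose side is divisible by the patch size.
    The defaults (224, 16) are the common ViT-Base settings, assumed here.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    num_patches = patches_per_side ** 2
    # Optionally add one learnable [CLS] token whose final state feeds the classifier head.
    return num_patches + (1 if cls_token else 0)


print(vit_sequence_length())                 # 197: 14 x 14 = 196 patches + 1 [CLS]
print(vit_sequence_length(cls_token=False))  # 196
```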

Because a Vision Transformer attends over the whole image at once, the model learns visual features that separate image content from rendered text, which makes it effective at telling medical images apart from document annotations. Once images are classified, you can apply Visual NLP techniques to the relevant ones to extract information, using both the layout and the textual content of each document.
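One way to chain the two stages is to use the predicted label as a router: only images classified as `document_notes` go on to text extraction. The sketch below shows that logic in plain Python over already-collected predictions. The file names and the `route_predictions` helper are hypothetical and exist only for illustration.

```python
from collections import defaultdict

# Labels emitted by visualclf_vit_dicom (see Predicted Entities).
LABELS = {"image", "document_notes", "others"}


def route_predictions(predictions):
    """Group (path, label) pairs by predicted label (hypothetical helper).

    Files labelled 'document_notes' are the candidates for downstream
    Visual NLP text extraction; 'image' and 'others' are kept separate.
    """
    routes = defaultdict(list)
    for path, label in predictions:
        if label not in LABELS:
            raise ValueError(f"unexpected label: {label!r}")
        routes[label].append(path)
    return dict(routes)


routes = route_predictions([
    ("scan_001.jpg", "image"),
    ("note_002.jpg", "document_notes"),
    ("logo_003.jpg", "others"),
])
print(routes["document_notes"])  # ['note_002.jpg']
```

In a Spark job the same selection would more naturally be applied directly to the pipeline output, for example with `result.filter(F.array_contains("class.result", "document_notes"))`.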

This makes the model a useful first stage for workflows that combine medical image classification, document analysis, and structured information extraction, such as healthcare applications, research, and document management systems.

Predicted Entities

'image', 'document_notes', 'others'

How to use

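```python
from johnsnowlabs import nlp
from pyspark.sql import functions as F

# Assumes `spark` is an active Spark session with the licensed libraries loaded.

# Convert raw images into the image annotation format expected by the classifier.
image_assembler = nlp.ImageAssembler() \
    .setInputCol("image") \
    .setOutputCol("image_assembler")

# Load the pretrained DICOM image classifier.
imageClassifier_loaded = nlp.ViTForImageClassification.pretrained("visualclf_vit_dicom", "en", "clinical/ocr") \
    .setInputCols(["image_assembler"]) \
    .setOutputCol("class")

pipeline = nlp.Pipeline().setStages([
    image_assembler,
    imageClassifier_loaded
])

# Spark's built-in image data source; dropInvalid skips files that cannot be decoded.
test_image = spark.read \
    .format("image") \
    .option("dropInvalid", value=True) \
    .load("./dicom.JPEG")

result = pipeline.fit(test_image).transform(test_image)

# "class" holds an array of annotations; explode it to show one predicted label per row.
result.select(F.explode("class.result").alias("label")).show(1, False)
```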
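Conceptually, an image classification head produces one score (logit) per label, and the reported class is the label with the highest score after a softmax. The sketch below illustrates only that final step with invented logits. It is a generic illustration, not the internals of `ViTForImageClassification`, and the label order in `LABELS` is an assumption.

```python
import math

# Assumed label order; the model's internal index order is not documented here.
LABELS = ["image", "document_notes", "others"]


def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits, labels=LABELS):
    """Return the highest-probability label and the full label -> probability map."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], dict(zip(labels, probs))


# Hypothetical logits for one input image.
label, probs = predict([3.1, 0.4, -1.2])
print(label)  # image
```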

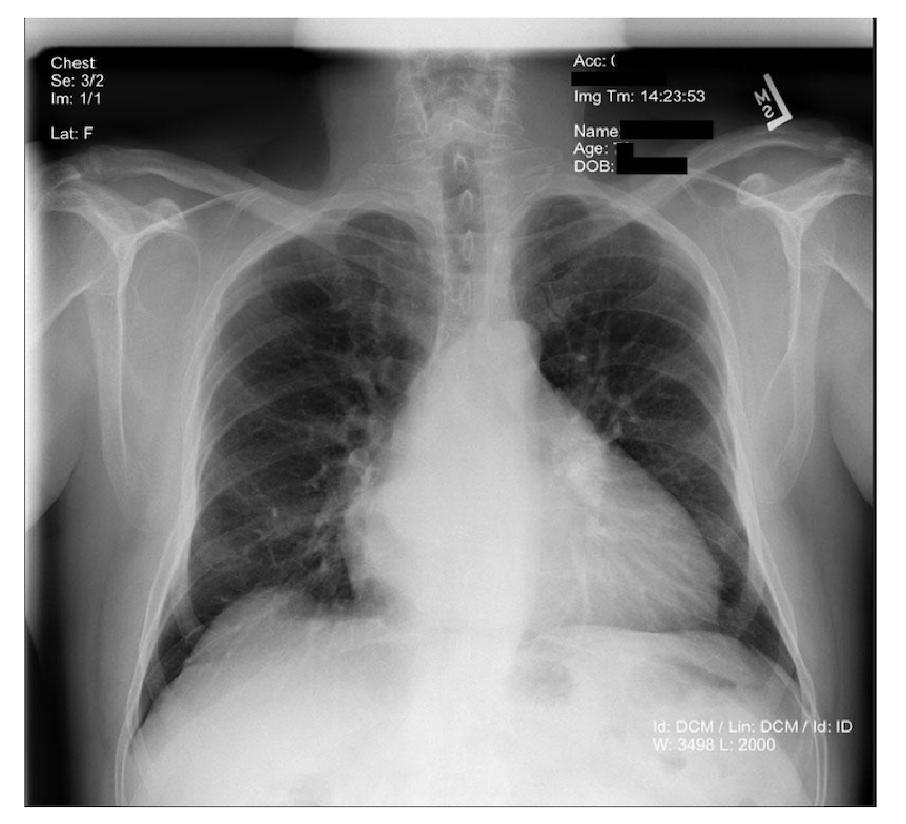

Example

Input:

Output text

```
+-----+
|label|
+-----+
|image|
+-----+
```

Model Information

| Model Name: | visualclf_vit_dicom |
|---|---|
| Compatibility: | Healthcare NLP 5.0.1+ |
| License: | Licensed |
| Edition: | Official |
| Input Labels: | [image_assembler] |
| Output Labels: | [class] |
| Language: | en |
| Size: | 321.6 MB |